TL;DR

2025 was defined by a shift toward reasoning-focused LLMs and the rise of agents that use tools iteratively. Coding agents, command-line integrations, and asynchronous web-based code execution emerged as prominent practical uses.

What happened

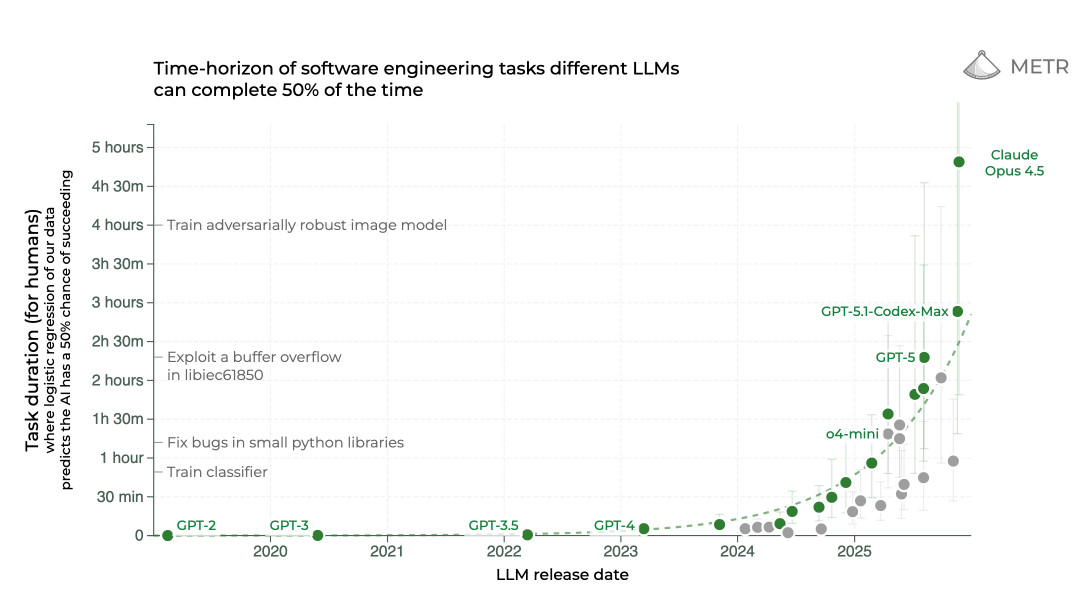

Across 2025 the dominant theme was a shift from raw pretraining scale toward prolonged reinforcement-style fine-tuning, which produced what practitioners call “reasoning.” The trend was seeded by OpenAI’s o1 and o1-mini launch in September 2024 and followed by o3, o3-mini and o4-mini in early 2025. Many labs then released reasoning-capable models, along with API dials that control reasoning intensity. Reasoning models proved especially valuable when paired with tool access: they could plan and execute multi-step tasks, they improved AI-assisted search (cited examples include GPT-5 Thinking and Google’s “AI mode”), and they became notably better at writing and debugging code.

In parallel, agents (LLMs that run tools in a loop to achieve a goal) moved from speculative concept to widely used pattern, with coding and search as the breakout categories. Anthropic’s Claude Code, quietly released in February as part of the Claude 3.7 Sonnet announcement and later extended into an asynchronous web-based agent, became a focal example of this shift.
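The agent pattern described above, an LLM calling tools in a loop until it reaches a goal, fits in a few lines of Python. This is a minimal illustration, not any vendor’s implementation: `scripted_model` is a hypothetical stand-in for a real LLM call, and the `add` tool, the message dictionaries and the `max_steps` budget are assumptions chosen for the example.

```python
from typing import Callable

# Hypothetical tool registry: tool name -> function that takes a string argument.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda args: str(sum(int(x) for x in args.split(","))),
}

def scripted_model(history: list[dict]) -> dict:
    """Hypothetical stand-in for an LLM: requests one tool call, then gives a final answer."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "tool": "add", "args": "2,3"}
    return {"type": "final", "content": f"The sum is {tool_results[-1]['content']}"}

def run_agent(goal: str, model=scripted_model, max_steps: int = 10) -> str:
    """Call the model, run any requested tool, feed the result back, and repeat."""
    history = [{"role": "user", "content": goal}]
    for _ in range(max_steps):
        action = model(history)
        if action["type"] == "final":
            return action["content"]
        result = TOOLS[action["tool"]](action["args"])
        history.append({"role": "tool", "content": result})
    # Guard against a model that never stops calling tools.
    raise RuntimeError("step budget exhausted before the goal was reached")

print(run_agent("What is 2 + 3?"))  # The sum is 5
```

The step budget matters in practice: without it, a model that keeps requesting tools would loop forever.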

Why it matters

- Reasoning via reinforcement-style rewards changed how capability progress is achieved, shifting compute from pretraining to long RL runs.

- Agents enable multi-step automation by combining planning, tool use, and iterative execution, which is transforming search and software workflows.

- Asynchronous coding agents reduce local security risks by running code in remote sandboxes rather than on the developer’s machine, and they let users run several tasks in parallel from a phone or terminal.

- Command-line and IDE integrations have made LLMs more immediately useful to developers, lowering the barrier to complex tools such as sed, ffmpeg, and bash.
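The parallel-dispatch side of asynchronous coding agents can be sketched with `asyncio`. In this hypothetical sketch each task gets its own temporary directory as a stand-in for a remote sandbox; a temporary directory on the local machine is not a security boundary, and `run_task_in_sandbox` is a placeholder for whatever work a real agent would do.

```python
import asyncio
import tempfile
from pathlib import Path

async def run_task_in_sandbox(task: str) -> str:
    """Hypothetical stand-in for a remote sandbox: each task gets an isolated temp directory."""
    with tempfile.TemporaryDirectory() as workdir:
        # A real agent would edit code and run tests here; this sketch only writes a file.
        out = Path(workdir) / "RESULT.txt"
        out.write_text(f"done: {task}")
        await asyncio.sleep(0.01)  # simulate remote work without blocking the other tasks
        return out.read_text()

async def dispatch(tasks: list[str]) -> list[str]:
    # Start every task at once; gather returns results in the order the tasks were given.
    return await asyncio.gather(*(run_task_in_sandbox(t) for t in tasks))

results = asyncio.run(dispatch(["fix flaky test", "bump dependency"]))
print(results)  # ['done: fix flaky test', 'done: bump dependency']
```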

Key facts

- The reasoning trend was initiated by OpenAI’s o1 and o1-mini in September 2024 and extended with o3, o3-mini and o4-mini in early 2025.

- Reinforcement Learning from Verifiable Rewards (RLVR) trains models on automatically verifiable tasks and encourages intermediate problem-solving steps.

- Nearly every notable AI lab released at least one reasoning model in 2025; many API models offer controls to increase or decrease reasoning.

- Reasoning models paired with tool access improved multi-step planning, AI-assisted search, and code debugging abilities.

- Agents were reframed as LLMs that call tools in a loop to pursue a goal; coding and search emerged as leading agent use cases.

- Anthropic released Claude Code (bundled in a Claude 3.7 Sonnet post) in February and later offered a web asynchronous coding agent.

- Multiple vendors released CLI coding agents in 2025, including Claude Code, Codex CLI, Gemini CLI, Qwen Code and Mistral Vibe.

- Vendor-independent CLI and agent options mentioned include GitHub Copilot CLI, Amp, OpenHands CLI and Pi; IDEs like Zed, VS Code and Cursor invested in integration.

- Anthropic attributed $1 billion in annual recurring revenue to Claude Code as of December 2, 2025, according to the source.

- A safety trade-off emerged: by default, agents typically ask for confirmation before taking actions, while YOLO (automatic-confirmation) modes skip those checks and are increasingly popular.
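The confirmation trade-off described above can be illustrated with a small gate around command execution. This is a hypothetical sketch, not how any particular CLI agent implements it: `make_executor`, `confirm` and the `yolo` flag are illustrative names, and the commands are never actually run.

```python
from typing import Callable

def make_executor(yolo: bool, confirm: Callable[[str], bool]) -> Callable[[str], str]:
    """Wrap command execution in an optional confirmation gate (hypothetical sketch)."""
    def execute(command: str) -> str:
        # Default mode asks before acting; YOLO mode never consults the user.
        if not yolo and not confirm(command):
            return f"skipped: {command}"
        # A real agent would run the command here (e.g. with subprocess.run); this sketch does not.
        return f"ran: {command}"
    return execute

def deny_all(command: str) -> bool:
    """Simulates a user who rejects every prompt; interactively this might wrap input()."""
    return False

careful = make_executor(yolo=False, confirm=deny_all)
reckless = make_executor(yolo=True, confirm=deny_all)
print(careful("rm -rf build/"))   # skipped: rm -rf build/
print(reckless("rm -rf build/"))  # ran: rm -rf build/
```

In YOLO mode the user’s answer is never consulted, so a destructive command goes through even though every prompt would have been rejected.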

What to watch next

- How labs tune and expose reasoning controls (API dials) and whether that changes model behavior across tasks.

- Adoption curve and developer workflows for asynchronous coding agents and CLI-based tooling across IDEs and terminals.

- Security outcomes and industry norms around automatic-confirmation (YOLO) modes versus conservative confirmation defaults.
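Reasoning dials are usually exposed as a request parameter. As one concrete example, OpenAI’s Chat Completions API accepts a `reasoning_effort` value (`low`, `medium` or `high`) for its o-series reasoning models; the sketch below only builds the request body and does not send it. The model name and the accepted values are assumptions to check against the current API reference, since both change over time, and other vendors expose reasoning controls differently (Anthropic, for example, uses a token budget for extended thinking).

```python
import json

ALLOWED_EFFORTS = ("low", "medium", "high")  # assumed values; check the current API docs

def build_request(prompt: str, effort: str = "medium", model: str = "o3-mini") -> dict:
    """Build (but do not send) a Chat Completions request body with a reasoning dial."""
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"effort must be one of {ALLOWED_EFFORTS}, got {effort!r}")
    return {
        "model": model,
        # Higher effort typically means more reasoning tokens, more latency and more cost.
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }

print(json.dumps(build_request("Plan a database migration.", effort="high"), indent=2))
```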

Quick glossary

- LLM: Large Language Model, a machine learning model trained on vast amounts of text to generate or understand language.

- Reasoning (in LLMs): A capability emerging from reinforcement-style fine-tuning where models produce intermediate steps and strategies that resemble human problem solving.

- RLVR: Reinforcement Learning from Verifiable Rewards, i.e. training LLMs on automatically verifiable tasks to shape desired behaviors.

- Agent: An LLM system that executes tool calls in a loop to pursue a user-specified goal, often planning and iterating across multiple steps.

- Asynchronous coding agent: A remote coding agent that accepts tasks, runs code in a sandboxed environment, and returns results (for example via pull requests) without needing continuous user interaction.
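The RLVR entry becomes more concrete if you look at the reward side alone: the score comes from an automatic check against a known answer, not from human ratings. This sketch assumes a hypothetical output convention in which completions end with `Answer: <integer>`. A full RLVR setup would sample many completions per problem and feed these scores into a policy-gradient update, which is not shown here.

```python
import re

def verifiable_reward(completion: str, expected_answer: int) -> float:
    """Return 1.0 if the final answer matches the known-correct one, else 0.0.

    Assumes the hypothetical convention that a completion ends with 'Answer: <integer>'.
    Intermediate reasoning is not graded directly; only the checkable result is.
    """
    match = re.search(r"Answer:\s*(-?\d+)\s*$", completion.strip())
    if match is None:
        return 0.0  # unparseable output earns no reward
    return 1.0 if int(match.group(1)) == expected_answer else 0.0

samples = [
    "17 * 3 = 51, plus 4 is 55. Answer: 55",
    "17 * 3 = 41, plus 4 is 45. Answer: 45",
    "I think it's around fifty-five.",
]
print([verifiable_reward(s, 55) for s in samples])  # [1.0, 0.0, 0.0]
```

Because only the final answer is checked, the model is free to discover whatever intermediate steps make correct answers more likely, which is how reasoning-like behavior is encouraged.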

Reader FAQ

What does 'reasoning' mean for LLMs?

It refers to models trained with reinforcement-style rewards (RLVR) that develop intermediate calculation steps and problem-solving strategies; this was popularized after OpenAI’s o1 family and subsequent models.

Are agents now widely useful, or still experimental?

According to the source, agents (defined as LLMs that call tools in a loop to achieve goals) became practically useful in 2025, especially for coding and search tasks.

Was Claude Code a major event in 2025?

Yes. Anthropic released Claude Code in February as part of the Claude 3.7 Sonnet announcement and later expanded it into a web-based asynchronous coding agent; the source highlights it as especially impactful.

Did agents replace human staff or fully automate complex jobs?

Not confirmed in the source.

From “2025: The year in LLMs” (31st December 2025): “This is the third in my annual series reviewing everything that happened in the LLM space over the past 12 months. For…”

Sources

- 2025: The Year in LLMs

- LLMs in 2025: The Year Reasoning, Agents, and …

- AI Coding Tools in 2025: Welcome to the Agentic CLI Era

- Top Stories of 2025! Big AI Poaches Talent, Reasoning …
