TL;DR

Hollis Robbins, a long-running observer of poetry and AI, examines Gwern’s prompting experiments and the startup Mercor’s hiring of poets to ask whether large language models can produce genuinely great poetry. Technical skill in rhyme, meter and metaphor has advanced, but questions remain about whether models can achieve the cultural resonance she associates with greatness.

What happened

Hollis Robbins surveys recent work probing whether large language models (LLMs) can produce poetry that rises beyond technical competence to what she calls "greatness": poetry that is both particular and universal. She highlights Gwern’s iterative, multi-stage prompting experiments: completing a William Empson fragment, composing Pindaric odes about laboratory animals, and using stacked model roles (brainstorming, drafting, critique, curation) to refine output. Robbins summarizes Gwern’s observation that early models either ignored instructions or produced odd outputs, that later models became obedient but bland after RLHF, and that more recent developments (chain-of-thought reasoning, capability scaling, and rubric-style training) restored creative variety. She also reports on Mercor, whose CEO says the company hires top poets and pays them roughly $150 an hour to create evaluation data and examples that teach models poetic behavior. Robbins concludes that technique is strong but that doubts remain about whether models can replicate the cultural embedding that makes poetry "great."

Why it matters

- LLMs can now reliably generate formal features of verse (rhyme, meter, stanza), shifting the debate from capability to value and cultural resonance.

- Prompt engineering and multi-model workflows (different models matched to brainstorming, critique, curation) replicate some human editorial practices and scale poetic production.

- Human poets are being paid to train and evaluate models, indicating industry demand for expert judgment in shaping AI creative behavior.

- If culture and historical embeddedness are prerequisites for poetic greatness, current model training (even on vast corpora) may not suffice to produce universally resonant work.

Key facts

- Robbins has tracked LLMs and poetry for more than five years and distinguishes technical ability from "greatness."

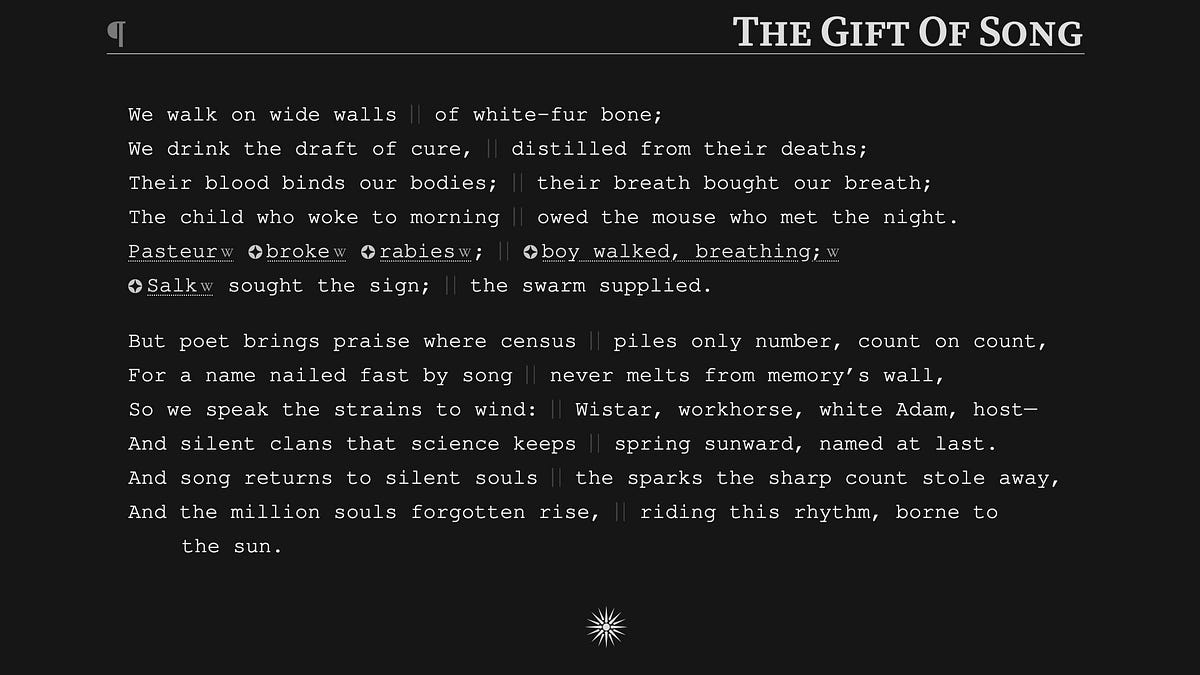

- Gwern ran experiments to complete William Empson’s poem "This Last Pain," to write Pindaric odes, and to produce romantic verse using staged prompting.

- Gwern’s prompting pipeline included style analysis, brainstorming multiple directions, rating and critiquing options, iterative drafting, line-by-line edits, and repeating the cycle.

- Earlier models often ignored instructions or produced non-poetic outputs; models refined with RLHF became more obedient but more generic, a phenomenon Gwern calls "mode collapse."

- Gwern credits creative gains to chain-of-thought (reasoning) models such as OpenAI’s o1-pro, capability scaling, and rubric-style training exemplified by Moonshot AI’s Kimi K2.

- In the Pindaric Ode project Gwern’s models assembled a databank of proper nouns, categories, and ritual terms to anchor poems to the theme of laboratory animals.

- Robbins reports Gwern’s final outputs as "very very good" and "occasionally great," though she senses something still missing.

- Mercor’s co-founder and CEO Brendan Foody told Tyler Cowen that the company hires top poets to create evals and behavior examples, and that it can pay about $150 an hour for that work.

What to watch next

- Whether follow-up work demonstrates that iterative, multi-model prompting consistently produces poems critics judge as culturally resonant and "great" (not confirmed in the source).

- How Mercor and similar firms scale poet-driven evaluation work and whether that changes model outputs at large user scale (not confirmed in the source).

- Whether and how communities of readers and poets come to treat AI-generated work over time, and whether cultural embedding emerges around machine-authored poems (not confirmed in the source).

Quick glossary

- Large Language Model (LLM): A machine learning model trained on large amounts of text to generate or transform language-based outputs.

- Reinforcement Learning from Human Feedback (RLHF): A training approach where human ratings guide model behavior, often used to align outputs with human preferences.

- Chain-of-Thought: A model capability or prompting technique that elicits intermediate reasoning steps to improve complex outputs.

- Mode collapse: A tendency for models to produce safe, generic outputs rather than diverse or risky creative responses.

- Pindaric ode: A formal ode built on a triadic structure (strophe, antistrophe, epode), historically associated with celebratory public lyric.

Reader FAQ

Can LLMs already write great poetry?

Robbins reports strong technical skill and some "occasionally great" outputs in Gwern’s experiments, but she remains skeptical that models achieve the cultural embedding she associates with greatness.

What techniques did Gwern use to get better results?

He used multi-stage prompting: analyze the original, brainstorm many directions, critique and rate options, draft and line-edit iteratively, and employ different models for distinct tasks.
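The staged workflow described above can be sketched roughly as a loop over roles. This is a minimal illustration, not Gwern’s actual code: the role names, the prompt wording, the `run_pipeline` and `make_stub` helpers, and the `rate` scoring function are all hypothetical, and the "models" are deterministic stubs standing in for real model calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical interface: a "model" is any function from prompt text to reply text.
Model = Callable[[str], str]


@dataclass
class PoemState:
    source_text: str
    style_notes: str = ""
    draft: str = ""
    history: List[str] = field(default_factory=list)


def run_pipeline(
    source_text: str,
    models: Dict[str, Model],
    rate: Callable[[str], float],
    n_directions: int = 3,
    n_rounds: int = 2,
) -> PoemState:
    """Analyze, brainstorm, rate, draft, critique, line-edit, then repeat."""
    state = PoemState(source_text=source_text)
    # Stage 1: style analysis of the original text.
    state.style_notes = models["analyst"](f"Describe the style of:\n{source_text}")
    for _ in range(n_rounds):
        # Stage 2: brainstorm several candidate directions.
        directions = [
            models["brainstormer"](
                f"Style notes: {state.style_notes}\n"
                f"Current draft: {state.draft or '(none)'}\n"
                f"Propose direction #{i + 1}."
            )
            for i in range(n_directions)
        ]
        # Stage 3: rate the options and keep the highest-scoring one.
        best = max(directions, key=rate)
        # Stage 4: draft, critique, then line-edit, each with its own role.
        draft = models["drafter"](f"Write a draft following: {best}")
        critique = models["critic"](f"Critique line by line:\n{draft}")
        state.draft = models["editor"](
            f"Revise:\n{draft}\nUsing critique:\n{critique}"
        )
        state.history.append(state.draft)
    return state


def make_stub(role: str) -> Model:
    """Deterministic stand-in for a real model call, for demonstration only."""
    def model(prompt: str) -> str:
        return f"[{role}] reply to a {len(prompt)}-character prompt"
    return model


ROLES = ["analyst", "brainstormer", "drafter", "critic", "editor"]
stub_models = {role: make_stub(role) for role in ROLES}
# rate=len is a placeholder score; a real setup would use a model or human rating.
result = run_pipeline("An example fragment.", stub_models, rate=len, n_rounds=2)
print(result.draft)
```

Passing the models in as a dictionary keyed by role is one way to match different models to different tasks. Swapping a stub for a real client function would not change the loop.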

Why is Mercor hiring poets?

According to Mercor’s founder quoted in the source, poets create evaluation data and exemplars that teach models how to behave in poetic tasks; he cited pay of about $150 an hour for top talent.

Are models trained on existing poetry?

The source notes LLMs have most digitized poetry in their training data and therefore tend to draw on existing patterns, but it also emphasizes that being trained on texts is not the same as possessing a culture.

Sources

- LLM poetry and the "greatness" question: Experiments by Gwern and Mercor

- LLM poetry and the "greatness" question – Hollis Robbins

- LLM poetry and the "greatness" question

- Post by @mariaa.bsky.social — Bluesky
