TL;DR

OpenAI plans to deploy Cerebras' wafer-scale CS-3 accelerators across datacenters through 2028 under a cloud service arrangement that sources value at more than $10 billion. The move aims to speed up inference using Cerebras' SRAM-heavy architecture, though limited SRAM capacity and high per-chip power draw create trade-offs.

What happened

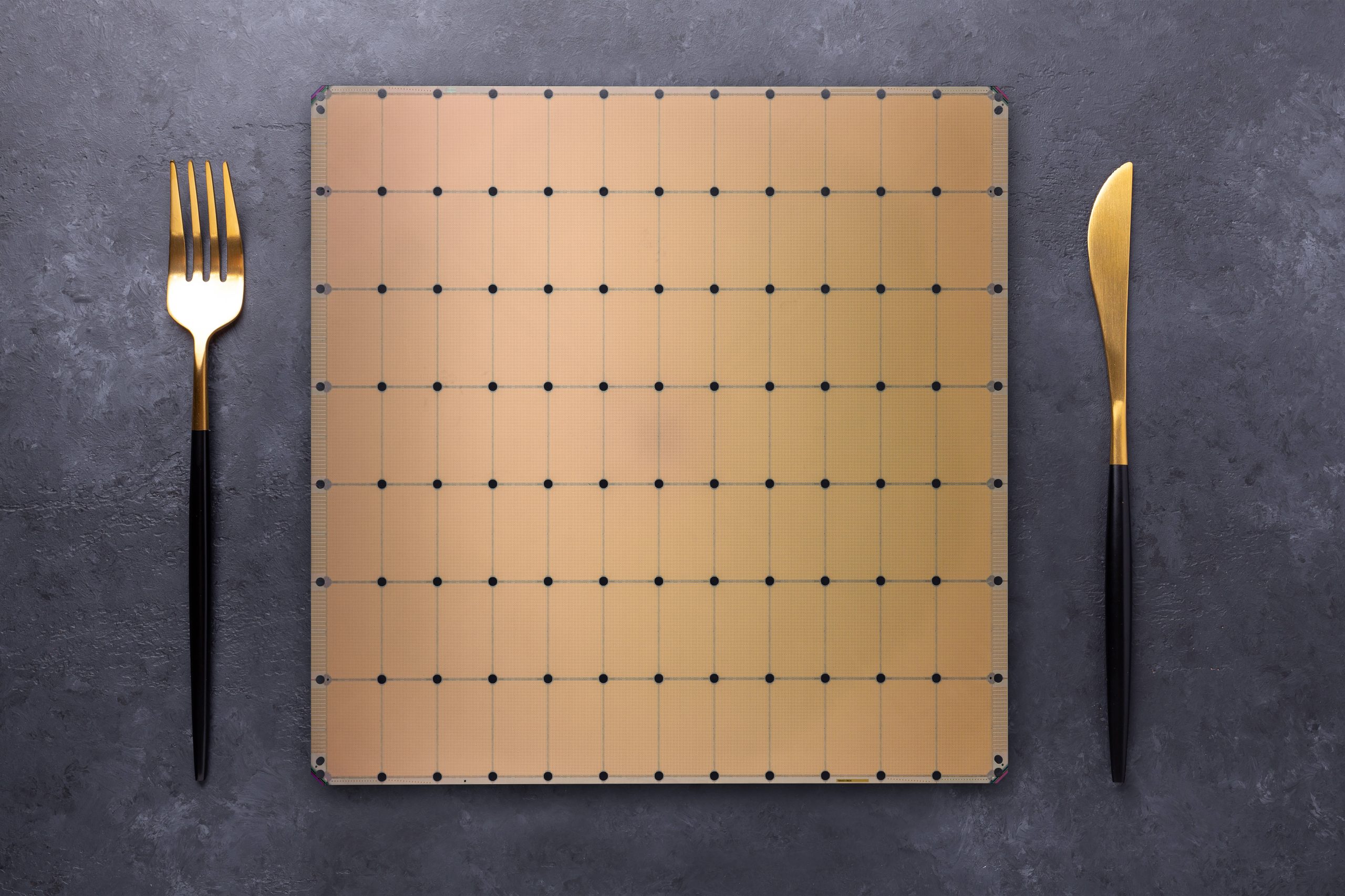

OpenAI announced it will deploy Cerebras' wafer-scale accelerators as part of its inference infrastructure, committing up to 750 megawatts of Cerebras kit through 2028. Sources cited by The Register value the cloud service agreement, under which Cerebras will build and lease datacenters to serve OpenAI, at more than $10 billion.

Cerebras' WSE-3 chips measure roughly 46,225 mm² and include 44 GB of on-chip SRAM. That SRAM gives the accelerators far higher memory bandwidth than the HBM on modern GPUs, a difference the article frames as a major advantage for token-generation speed and real-time interactivity.

The piece also highlights limits. SRAM is space-inefficient, so larger networks require model parallelism across chips; each accelerator is rated at about 23 kW; and, depending on precision, the weights of even some mid-size models exceed one chip's SRAM and need multiple CS-3s. OpenAI's model router (introduced with GPT-5) and possible disaggregated inference architectures are mentioned as ways to mitigate those constraints.
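For a sense of scale, the 750 MW ceiling and the roughly 23 kW per-accelerator rating reported above can be combined into a rough upper bound on system count. This is a back-of-envelope sketch, not a figure from the article: it assumes the entire power budget goes to accelerators, with nothing reserved for cooling, networking, or host servers, so a real deployment would be smaller. The function name `max_systems` is illustrative.

```python
# Back-of-envelope upper bound on how many accelerators fit in the
# reported power commitment.
# Assumption (not from the source): all power goes to accelerators,
# with no overhead for cooling, networking, or host servers.

TOTAL_POWER_MW = 750   # reported commitment ceiling through 2028
SYSTEM_POWER_KW = 23   # reported approximate rating per accelerator


def max_systems(total_mw: float, per_system_kw: float) -> int:
    """Return the largest whole number of systems within the budget."""
    total_kw = total_mw * 1000
    return int(total_kw // per_system_kw)


if __name__ == "__main__":
    count = max_systems(TOTAL_POWER_MW, SYSTEM_POWER_KW)
    print(f"Upper bound: about {count:,} systems")
```

Under these assumptions the bound comes out to a little over 32,000 systems; any facility overhead pushes the real number lower.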

Why it matters

- Much higher memory bandwidth on Cerebras chips could reduce latency for token generation, improving interactivity for chat, code generation, and agent loops.

- Deploying alternate accelerator architectures signals continued competition in inference hardware beyond Nvidia GPUs.

- The deal structure, with Cerebras taking on datacenter buildout risk, could change cost and operational dynamics for large-scale model serving.

- Memory and power trade-offs mean the shift may favor faster, smaller-model handling for most queries while reserving large models for special cases.

Key facts

- OpenAI will deploy up to 750 megawatts of Cerebras accelerators through 2028, according to OpenAI and reporting.

- Sources cited by The Register put the deal value at more than $10 billion.

- Cerebras' WSE-3 accelerators are about 46,225 mm² and carry 44 GB of on-chip SRAM.

- Reported memory bandwidth for Cerebras chips is around 21 PB/s, compared with roughly 22 TB/s for an Nvidia Rubin GPU as cited in the article.

- In one performance comparison cited, running gpt-oss 120B, Cerebras chips reached about 3,098 tokens/sec for a single user versus about 885 tokens/sec for a Together AI setup using Nvidia GPUs.

- SRAM capacity is limited relative to its physical footprint; the article notes the chips pack about as much memory as a six-year-old Nvidia A100 PCIe card.

- Each CS-3 accelerator is rated at approximately 23 kW. At 16-bit precision, the article states, 1 billion parameters consume about 2 GB of SRAM, so some models require multiple accelerators (e.g., Llama 3 70B needed at least four CS-3s).

- Cerebras has shifted focus from training to inference since its last wafer-scale launch; OpenAI uses a model router introduced with GPT-5 to route most requests to smaller models.
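The SRAM sizing rule in the key facts (about 2 GB per billion parameters at 16-bit precision, 44 GB per chip) can be checked with a short calculation. The sketch below counts model weights only; ignoring KV cache, activations, and any runtime overhead is a simplifying assumption, so the result is a lower bound on chip count. The helper names `weights_gb` and `min_chips` are illustrative, not part of any Cerebras tooling.

```python
import math

SRAM_PER_CHIP_GB = 44  # on-chip SRAM per WSE-3, as reported


def weights_gb(params_billion: float, bits_per_param: int = 16) -> float:
    """Weight footprint in GB; 1B parameters at 16 bits is about 2 GB."""
    return params_billion * bits_per_param / 8


def min_chips(params_billion: float, bits_per_param: int = 16,
              sram_gb: float = SRAM_PER_CHIP_GB) -> int:
    """Lower bound on chips needed to hold the weights alone.

    Assumption: KV cache, activations, and overhead are ignored.
    """
    return math.ceil(weights_gb(params_billion, bits_per_param) / sram_gb)


if __name__ == "__main__":
    # A 70B-parameter model at 16-bit precision: 140 GB of weights.
    print(weights_gb(70), "GB ->", min_chips(70), "chips")
    # The same model at a hypothetical 4-bit format: 35 GB of weights.
    print(weights_gb(70, 4), "GB ->", min_chips(70, 4), "chip")
```

For a 70B-parameter model at 16 bits this gives 140 GB and a minimum of four chips, which agrees with the Llama 3 70B figure cited. The 4-bit line illustrates why lower-precision formats matter for fitting a model on a single chip.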

What to watch next

- Whether Cerebras will place supporting GPUs in its leased datacenters to enable disaggregated inference architectures — not confirmed in the source.

- Any public disclosures of deployment timing, utilization rates, or cost terms from OpenAI or Cerebras beyond the 'through 2028' horizon.

- Whether Cerebras' next silicon increases SRAM area or adds modern block floating-point formats (e.g., MXFP4) to serve larger models on a single chip — not confirmed in the source.

Quick glossary

- SRAM (static RAM): A type of fast on-chip memory that provides low-latency access but generally has lower density and higher area cost than stacked DRAM like HBM.

- HBM (High Bandwidth Memory): Stacked DRAM used on many modern GPUs to provide high memory capacity and bandwidth, typically outside a chip's logic die.

- Wafer-scale accelerator: An integrated circuit approach that builds a single very large chip across a significant portion of a silicon wafer to provide massive compute and on-chip memory.

- Inference: The phase where a trained model processes inputs and produces outputs, as opposed to the training phase where the model's weights are learned.

- Disaggregated inference: An architecture that splits different stages of model serving across heterogeneous hardware—for example, using GPUs for preprocessing and other accelerators for token generation.

Reader FAQ

Is the $10B valuation confirmed?

The figure comes from sources speaking to The Register, who value the arrangement at more than $10 billion; the article presents it as sourced reporting rather than an official number.

Will Cerebras build and operate datacenters for OpenAI?

The reporting says Cerebras will take on the risk of building and leasing datacenters to serve OpenAI under a cloud service agreement.

Will Cerebras chips replace GPUs entirely at OpenAI?

Not confirmed in the source; the article discusses possible mixed or disaggregated deployments and notes it would depend on Cerebras and deployment choices.

How do Cerebras accelerators compare on token generation speed?

The article cites an example in which Cerebras hardware achieved about 3,098 tokens/sec on a 120B model versus about 885 tokens/sec for a rival using Nvidia GPUs.
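To put the cited throughput numbers in more tangible terms, the short sketch below converts them into a speedup ratio and a per-token latency. It uses only the two figures quoted in the answer above; the 1,000-token response length is an arbitrary illustration, and the calculation assumes steady single-user generation with no time-to-first-token or network delay.

```python
CEREBRAS_TOKENS_PER_SEC = 3098  # cited single-user figure
GPU_TOKENS_PER_SEC = 885        # cited figure for the Nvidia-based setup


def speedup(fast_tps: float, slow_tps: float) -> float:
    """Ratio of the faster throughput to the slower one."""
    return fast_tps / slow_tps


def ms_per_token(tokens_per_sec: float) -> float:
    """Average time per generated token, in milliseconds."""
    return 1000 / tokens_per_sec


def seconds_for(tokens: int, tokens_per_sec: float) -> float:
    """Generation time for a response of the given length.

    Assumption: constant throughput, no startup or network latency.
    """
    return tokens / tokens_per_sec


if __name__ == "__main__":
    ratio = speedup(CEREBRAS_TOKENS_PER_SEC, GPU_TOKENS_PER_SEC)
    print(f"Speedup: {ratio:.1f}x")
    for name, tps in [("Cerebras", CEREBRAS_TOKENS_PER_SEC),
                      ("GPU setup", GPU_TOKENS_PER_SEC)]:
        print(f"{name}: {ms_per_token(tps):.2f} ms/token, "
              f"{seconds_for(1000, tps):.2f} s per 1,000 tokens")
```

On these numbers the gap is roughly 3.5x, or about a third of a second versus a little over a second for a 1,000-token reply.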

Source article (Systems): "OpenAI to serve up ChatGPT on Cerebras’ AI dinner plates in $10B+ deal", subtitled "SRAM-heavy compute architecture promises real-time agents, extended reasoning capabilities to bolster Altman's valuation". By Tobias Mann, Thu 15 Jan 2026…

Sources

- OpenAI to serve up ChatGPT on Cerebras’ AI dinner plates in $10B+ deal

- Strategic Intelligence for AI Engineers & Leaders | AI Tech TL …

- Top 15 Most Valuable AI Startups in 2026: Valuations … – Jarsy

- AI Hub — Real-Time AI Intelligence
