TL;DR

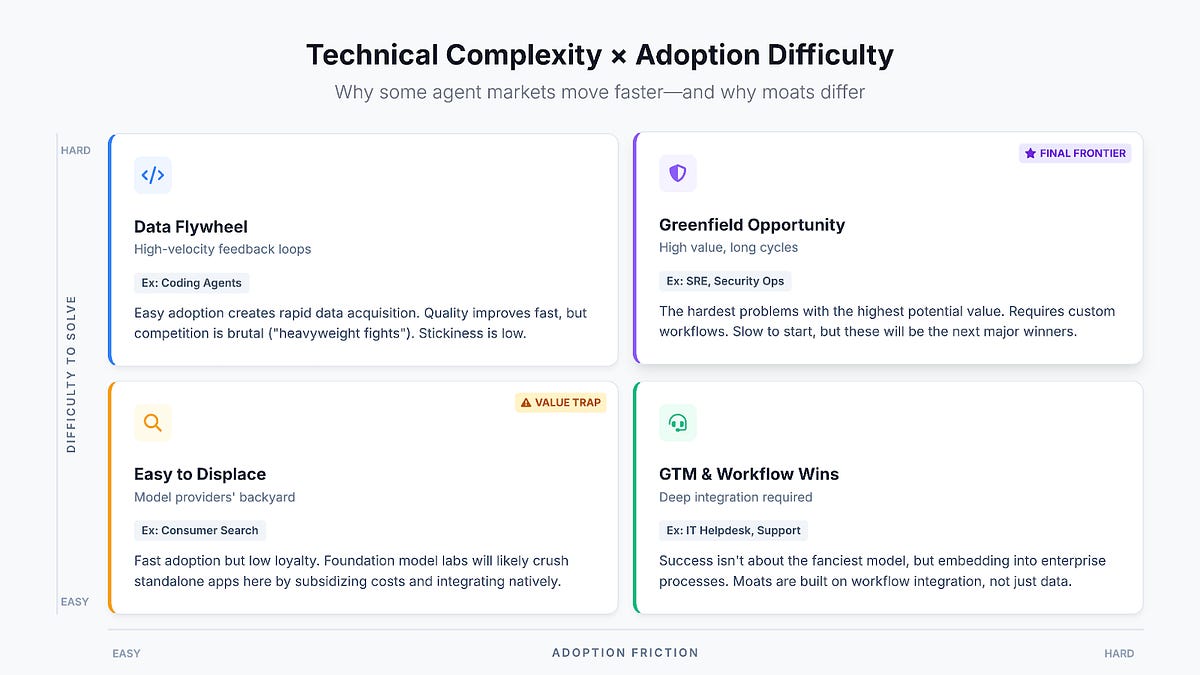

The authors argue that data—not model architecture—is the primary competitive barrier for AI applications, and that the way products are adopted determines how effectively they collect that data. They map AI use cases into a four-quadrant framework where adoption ease and technical difficulty shape which businesses build durable advantages.

What happened

In a recent piece, Vikram Sreekanti and Joseph E. Gonzalez lay out a framework linking product adoption patterns to the formation of a "data moat." They divide AI applications into four quadrants based on how hard they are to adopt and how hard they are to solve: easy/easy, easy/hard, hard/easy, and hard/hard. Using examples such as coding agents and enterprise support bots, the authors show that easy adoption creates feedback loops that accelerate model improvement — exemplified by Cursor’s rapid iteration in developer tooling — while enterprise integrations produce customer-specific data that makes replacements difficult. They note that foundation model providers are likely to dominate obvious, high-volume consumer use cases, whereas the most valuable and defensible opportunities may lie in complex, enterprise-specific workflows despite slower improvement cycles. The piece also considers how adoption mechanics affect competition, stickiness, and where startups might focus.

Why it matters

- Data acquisition patterns determine whether a product can improve rapidly or become entrenched inside customers.

- Low barriers to adoption accelerate model training but also leave products vulnerable to displacement by major model providers.

- Enterprise integrations can create customer-specific expertise that is difficult for newcomers to replicate.

- Hard-to-solve, hard-to-adopt problems may yield the most durable value but require longer timelines and deeper investment.

Key facts

- The authors propose a 2×2 framework of adoption difficulty versus problem difficulty to analyze AI product dynamics.

- Coding agents improved quickly because adoption was easy (developers could switch tools with little friction) and usage provided fast, granular feedback.

- Cursor is cited as an example where easy adoption produced a data flywheel that accelerated product quality for code generation.

- Foundation model providers (named: OpenAI, Google, Anthropic) and newcomers (Perplexity, You.com) focus on high-volume, easy-to-adopt consumer use cases.

- Enterprise applications (hard-to-adopt, easy-to-solve) gain stickiness through integration work and knowledge of customer processes, creating a different kind of data moat.

- Hard-to-adopt, hard-to-solve areas like SRE and security operations have received relatively less attention but could offer high long-term value.

- The authors observe that loyalty is generally low in easy-to-adopt categories; users commonly try multiple agents for different tasks.

- They caution that smaller players in widely used productivity categories may struggle against well-funded model providers unless they invest heavily.

What to watch next

- Whether frontier model labs will expand beyond document editors into broader office-suite productivity tools, as the authors expect.

- Emergence of interoperability standards or migration mechanisms that would reduce vendor lock-in for coding and productivity agents.

- not confirmed in the source

Quick glossary

- Data moat: A competitive advantage formed when a company’s access to unique or hard-to-replicate data makes its product difficult for others to match.

- Adoption model: The pattern by which users or organizations start using a product, including whether it is an individual choice or requires organizational buy-in.

- Data flywheel: A virtuous cycle where product usage generates data that improves the product, which in turn drives more usage and more data.

- Foundation model provider: A company that trains and serves large pre-trained models which other applications can build on or integrate with.

Reader FAQ

What do the authors mean by 'data is your only moat'?

They mean that access to ongoing, useful data is the central source of competitive advantage for AI products, shaping both product improvement and stickiness.

Why did coding agents improve faster than other applications?

Because developer tools were easy to adopt and generated many fine-grained feedback signals (accepted/rejected suggestions), creating a rapid data flywheel.

Will major model providers win every category?

The piece suggests model providers are advantaged in high-volume, easy-to-adopt areas, but enterprise integrations and hard technical problems can produce different, durable moats.

Are there specific companies that have already secured these data moats?

The authors mention Cursor, OpenAI, Google, Anthropic, Perplexity, You.com, Sierra, and Decagon as examples in different contexts, but detailed market positions are not fully analyzed.

Discover more from The AI Frontier Lessons from building an AI product at RunLLM and the latest AI research at Cal. Over 4,000 subscribers Subscribe By subscribing, I agree to…

Sources

- Data is the only moat

- Are Data Moats Dead in the Age of AI?

- Building AI Features Around Data, Not Just Functionality

- Is Proprietary Data Still a Moat in the AI Race?

Related posts

- How I Learned Programming: Why You Don’t Need LLMs to Learn Code

- Paper and code show Hessian inversion for tall‑skinny neural nets

- Single‑bit flip in AMD CPUs allows VM breach via SEV‑SNP stack engine