TL;DR

This report summarizes a primer on how modern digital cameras form images, starting from light detection on a sensor and extending to basic optics like the pinhole camera. It explains color capture via filter arrays, the role of exposure time, and how simple aperture geometry determines field of view and vignetting.

What happened

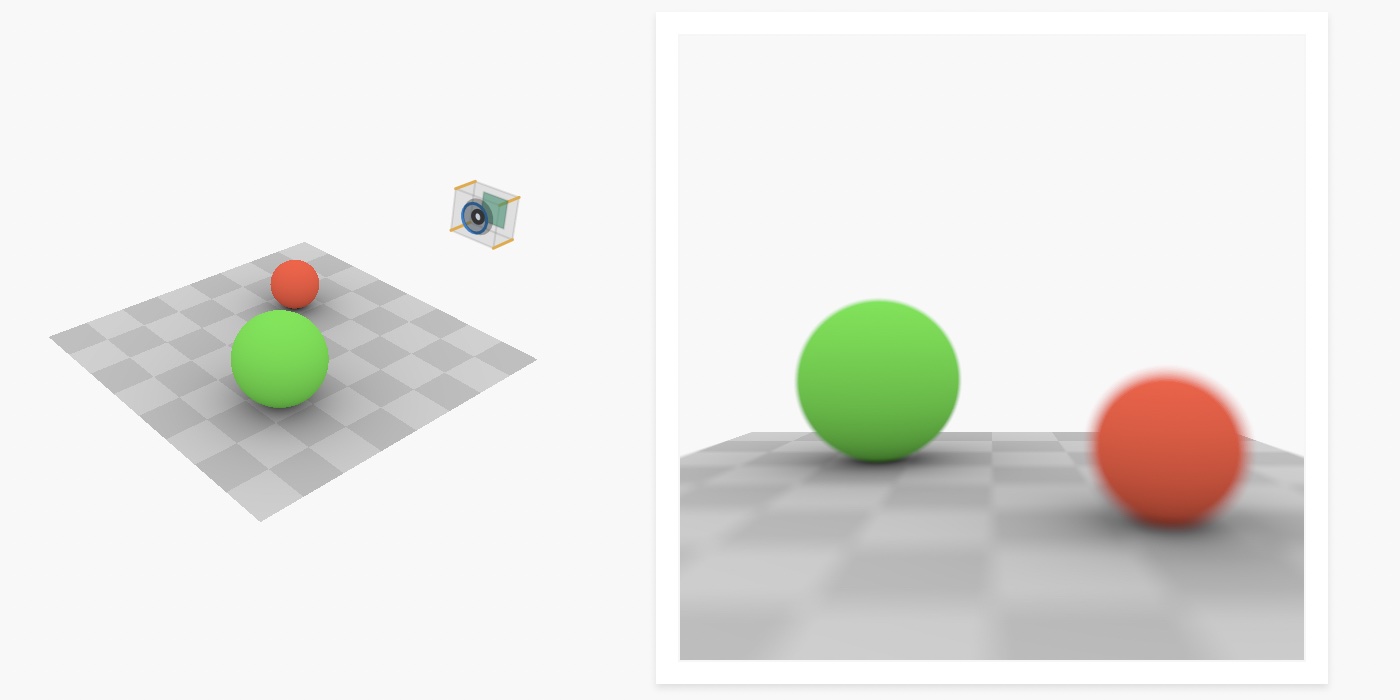

The source builds a simple camera from first principles and uses interactive demonstrations to show how photons become pictures. It starts with the image sensor, a grid of photodetectors that convert incoming photons into electrical signals; those signals set pixel brightness. Because individual detectors measure only intensity, a color filter array samples color (commonly a Bayer pattern with two green filters, one red and one blue per 2×2 block), and demosaicing algorithms interpolate full RGB values. The piece then shows that an unshielded sensor receives light from all directions, which produces an unintelligible result, so it places the sensor in an enclosure with a small hole to form a pinhole camera. The pinhole produces an inverted image, a field of view set by the hole-to-sensor distance, and natural vignetting that follows the cosine-fourth-power law.
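To make the filter-array step concrete, here is a minimal sketch of how a Bayer-covered sensor might sample a scene. The RGGB ordering, the function names `bayer_channel` and `mosaic`, and the tuple-based image format are assumptions for illustration; the source does not prescribe a layout or an implementation.

```python
def bayer_channel(row, col):
    """Return the filter color over the photosite at (row, col).

    Assumed RGGB layout: even rows alternate R, G; odd rows alternate G, B.
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(rgb_image):
    """Keep only the filtered channel at each photosite.

    rgb_image is a list of rows; each pixel is an (r, g, b) tuple.
    The result is a single-channel grid, similar to a raw sensor readout.
    """
    index = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[index[bayer_channel(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


if __name__ == "__main__":
    # A uniform orange-ish test scene, 4x4 pixels.
    scene = [[(200, 100, 50)] * 4 for _ in range(4)]
    for raw_row in mosaic(scene):
        print(raw_row)
```

Each 2×2 block of the output holds one red sample, two green samples and one blue sample, which is why the full color of every pixel has to be estimated afterwards.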

Why it matters

- Understanding sensor basics clarifies why exposure, color filters and post-processing affect final image quality.

- The geometry of the aperture and sensor controls field of view and apparent subject size in a photograph.

- Vignetting is a predictable optical consequence of simple camera geometry and affects corner brightness.

- Explaining demosaicing highlights that color reconstruction is computational and not directly measured by each photosite.

Key facts

- Historical analog photography used film coated with silver halide crystals that darken when exposed to light.

- Most contemporary cameras are digital and rely on image sensors made of a grid of photodetectors.

- A photodetector converts photons into electrical current; more photons produce a stronger signal and brighter pixels.

- Individual photosites measure intensity only; color capture requires a color filter array placed over detectors.

- The Bayer filter is a common arrangement with two green, one red and one blue filter per 2×2 cell because green correlates strongly with perceived brightness.

- Demosaicing reconstructs full-color pixels by interpolating values from neighboring filtered detectors.

- Shutter speed or exposure time controls how long photons are collected and therefore image brightness.

- An unshielded sensor integrates light from all directions, so a pinhole or aperture is needed to restrict ray directions and form a coherent image.

- A pinhole camera produces an inverted image; increasing the distance between the hole and the sensor narrows the field of view, and corners darken due to the cosine-fourth-power law (combined distance and angular effects).
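The relationship between hole-to-sensor distance and field of view in the last bullet follows from basic trigonometry. The sketch below assumes an ideal pinhole centered over a flat sensor; the function name `field_of_view_deg` and its parameters are hypothetical, and any consistent length unit works.

```python
import math


def field_of_view_deg(sensor_width, hole_to_sensor_distance):
    """Full angle of view across a sensor of the given width.

    Assumes an ideal pinhole centered over a flat sensor, so each sensor
    edge sits at an angle of atan((sensor_width / 2) / distance) off-axis.
    """
    if sensor_width <= 0 or hole_to_sensor_distance <= 0:
        raise ValueError("sensor_width and distance must be positive")
    half_angle = math.atan(sensor_width / (2 * hole_to_sensor_distance))
    return math.degrees(2 * half_angle)


if __name__ == "__main__":
    # Moving the sensor farther from the hole narrows the view.
    for distance in (25, 50, 100):
        print(distance, round(field_of_view_deg(36, distance), 1))
```

The printed angle shrinks as the distance grows, which is the same effect as a subject appearing larger in the frame.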

What to watch next

- How different demosaicing algorithms change final image artifacts and sharpness (not confirmed in the source)

- Replacement of pinhole models with real lenses and their influence on aberrations and sharpness (not confirmed in the source)

- Quantitative trade-offs between aperture size, diffraction and exposure in lens-based designs (not confirmed in the source)

Quick glossary

- Photodetector: A sensor element that converts incident photons into an electrical signal proportional to light intensity.

- Bayer filter: A color filter array pattern that places red, green and blue filters over photosites; typically uses two greens per 2×2 block.

- Demosaicing: The process of reconstructing full-color pixels from the partial color samples produced by a color filter array.

- Shutter speed (exposure time): The duration during which the sensor collects photons; it influences image brightness and motion capture.

- Vignetting: A reduction in image brightness at the frame periphery, often caused by geometric and angular effects in the optical path.

Reader FAQ

How do digital cameras capture color if photosites only measure intensity?

They use a color filter array (for example a Bayer pattern) over the photosites and then reconstruct full RGB values through demosaicing.
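As a rough illustration of the reconstruction step, the sketch below fills in the two missing channels at each photosite by averaging same-color samples in the surrounding 3×3 neighborhood. This simple neighbor averaging is an assumption chosen for brevity, not the algorithm the source or any particular camera uses; the RGGB layout and the names `bayer_channel` and `demosaic` are likewise hypothetical.

```python
def bayer_channel(row, col):
    """Filter color at (row, col) for an assumed RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(raw):
    """Estimate an (r, g, b) tuple for every photosite.

    raw is a grid (list of rows) of single-channel readings, at least 2x2.
    The measured channel is kept as-is; the two missing channels are the
    mean of same-color samples in the 3x3 neighborhood, clipped at borders.
    """
    height, width = len(raw), len(raw[0])
    result = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < height and 0 <= cc < width:
                        channel = bayer_channel(rr, cc)
                        sums[channel] += raw[rr][cc]
                        counts[channel] += 1
            pixel = {ch: sums[ch] / counts[ch] for ch in "RGB"}
            # Trust the direct measurement for this photosite's own filter color.
            pixel[bayer_channel(r, c)] = float(raw[r][c])
            out_row.append((pixel["R"], pixel["G"], pixel["B"]))
        result.append(out_row)
    return result


if __name__ == "__main__":
    raw = [
        [200, 100, 200, 100],
        [100, 50, 100, 50],
        [200, 100, 200, 100],
        [100, 50, 100, 50],
    ]
    print(demosaic(raw)[1][1])
```

On a uniform scene this recovers the original color exactly; on real images, averaging across edges is what produces the softness and color fringing that more elaborate algorithms try to avoid.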

What controls how bright an image appears?

The amount of time the sensor collects photons (shutter speed/exposure time) directly affects image brightness.
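A minimal model of this relationship treats the signal at one photosite as photon arrival rate multiplied by exposure time. The function name `collected_signal`, the constant arrival rate, and the optional `full_well` saturation limit are all assumptions of this sketch and do not come from the source.

```python
def collected_signal(photon_rate, exposure_time, full_well=None):
    """Expected photon count at one photosite.

    photon_rate: photons per second reaching the photosite (assumed constant).
    exposure_time: seconds during which the sensor collects light.
    full_well: optional cap on the count; a hypothetical saturation limit
               added for illustration, not described in the source.
    """
    if photon_rate < 0 or exposure_time < 0:
        raise ValueError("photon_rate and exposure_time must be non-negative")
    signal = photon_rate * exposure_time
    if full_well is not None:
        signal = min(signal, full_well)
    return signal


if __name__ == "__main__":
    # Doubling the exposure time doubles the collected signal.
    print(collected_signal(1000, 0.01))
    print(collected_signal(1000, 0.02))
```

Under this model, halving the exposure time halves the signal, so a darker scene needs a proportionally longer exposure to reach the same pixel brightness.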

Why is a pinhole camera image inverted?

Rays from the scene cross at the aperture so the formed projection on the sensor is rotated 180 degrees.
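The crossing of rays can be written as a one-line projection. The sketch below assumes an ideal pinhole at the origin with the scene at positive depth in front of it and the sensor a fixed distance behind it; the function name `project_through_pinhole` and the coordinate convention are assumptions for illustration.

```python
def project_through_pinhole(x, y, depth, hole_to_sensor_distance):
    """Map a scene point to sensor coordinates for an ideal pinhole.

    The hole sits at the origin. The scene point is at (x, y) sideways and
    `depth` in front of the hole; the sensor plane is
    `hole_to_sensor_distance` behind it. By similar triangles the image is
    scaled by distance / depth, and the sign flips because the ray crosses
    the optical axis at the hole.
    """
    if depth <= 0:
        raise ValueError("the scene point must be in front of the pinhole")
    scale = hole_to_sensor_distance / depth
    return (-x * scale, -y * scale)


if __name__ == "__main__":
    # A point up and to the right lands down and to the left on the sensor.
    print(project_through_pinhole(1.0, 2.0, 10.0, 5.0))
```

Both coordinates change sign, which is the 180-degree rotation described above rather than a simple mirror flip.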

What causes dark corners in pinhole photographs?

Natural vignetting described by the cosine-fourth-power law: combined distance and angular factors reduce illumination toward the corners.
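The falloff can be estimated directly from the geometry. This sketch assumes an ideal pinhole over a flat sensor and a uniformly bright scene; the function name `relative_illumination` and its parameters are hypothetical.

```python
import math


def relative_illumination(offset_from_center, hole_to_sensor_distance):
    """Brightness at a sensor point relative to the image center.

    The off-axis angle theta satisfies tan(theta) = offset / distance, and
    the cosine-fourth-power law gives a relative illumination of cos(theta)**4.
    """
    if hole_to_sensor_distance <= 0:
        raise ValueError("hole_to_sensor_distance must be positive")
    theta = math.atan2(offset_from_center, hole_to_sensor_distance)
    return math.cos(theta) ** 4


if __name__ == "__main__":
    # Brightness drops as the point moves away from the center.
    for offset in (0.0, 10.0, 20.0, 30.0):
        print(offset, round(relative_illumination(offset, 30.0), 3))
```

At an off-axis angle of 45 degrees, where the offset equals the hole-to-sensor distance, the corner receives about a quarter of the light that reaches the center.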

Source article: "Cameras and Lenses" by Bartosz Ciechanowski, published December 7, 2020.

Sources

- Cameras and Lenses

- The Pinhole Camera Model

- How Does a Camera Obscura Device Work?

- Introduction to Pinhole Photography on a DSLR
