TL;DR

The source walks through camera basics from photon capture on a digital sensor to color reconstruction and simple optics. It demonstrates how exposure, color filters, demosaicing and a pinhole enclosure together produce a usable photograph and explains why effects like image inversion and vignetting occur.

What happened

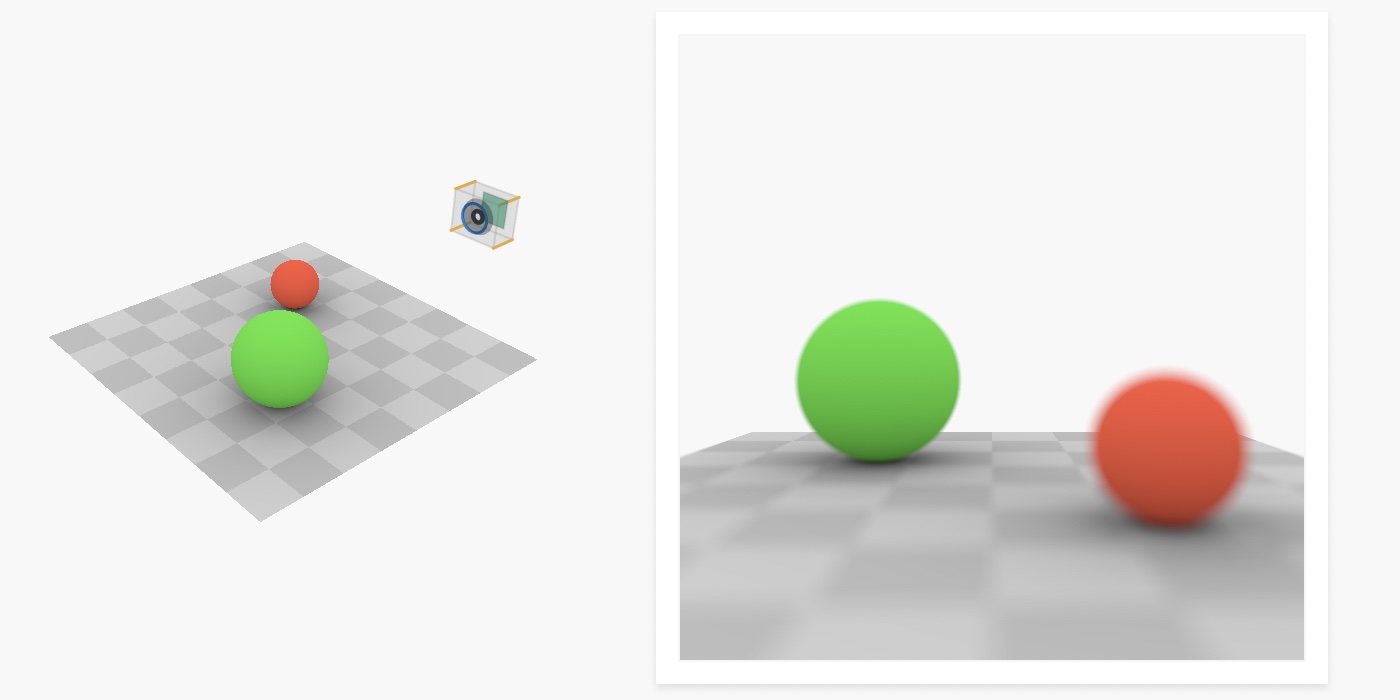

The author builds a simple camera model from first principles to show how digital images are formed. A sensor is presented as a grid of photodetectors that convert incoming photons into electrical signals. On their own, these record only intensity, so the raw output is monochrome. Color is captured by placing tiny red, green and blue filters over the pixels. The common Bayer arrangement uses two green filters per 2×2 block because green correlates strongly with perceived brightness. Demosaicing algorithms then interpolate the missing color values at each pixel to reconstruct a full-color image. Next, the sensor is enclosed in a box with a small opening to form a pinhole camera: rays cross at the aperture, so the scene lands on the sensor inverted, and the hole-to-sensor distance controls the field of view. The account concludes with corner darkening (vignetting), where illumination falls off as cos^4(α) because of combined distance and angular factors; α is the angle between the incoming ray and the axis through the hole perpendicular to the sensor.
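The filter-then-interpolate step can be illustrated with a minimal Python sketch. It is not the source's method or any camera's actual pipeline: the RGGB ordering, the function names (`filter_at`, `mosaic`, `demosaic`) and the plain 3×3 neighborhood averaging are all assumptions chosen for brevity, and the image is assumed to be at least 2×2 pixels.

```python
# Assumed RGGB layout: each 2x2 block holds one red, two green and one blue filter.
BAYER_RGGB = (("R", "G"),
              ("G", "B"))
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def filter_at(row, col):
    """Return which color filter covers the pixel at (row, col)."""
    return BAYER_RGGB[row % 2][col % 2]


def mosaic(rgb_image):
    """Simulate the sensor: keep only the channel that each pixel's filter passes."""
    return [
        [pixel[CHANNEL_INDEX[filter_at(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


def demosaic(raw):
    """Estimate R, G and B at every pixel by averaging the same-color
    samples found in its (edge-clipped) 3x3 neighborhood."""
    height, width = len(raw), len(raw[0])
    result = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            for rr in range(max(0, r - 1), min(height, r + 2)):
                for cc in range(max(0, c - 1), min(width, c + 2)):
                    color = filter_at(rr, cc)
                    sums[color] += raw[rr][cc]
                    counts[color] += 1
            # The pixel's own filter color was measured directly, so keep it as-is.
            own = filter_at(r, c)
            sums[own], counts[own] = raw[r][c], 1
            out_row.append(tuple(sums[k] / counts[k] for k in "RGB"))
        result.append(out_row)
    return result
```

Running `demosaic(mosaic(image))` on a uniformly colored image returns the original color at every pixel. On images with sharp edges, this naive averaging blurs and miscolors detail, which is one reason real demosaicing algorithms are more elaborate.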

Why it matters

- Explains the fundamental chain from photons to pixels, clarifying what sensors actually measure.

- Shows why color capture requires filters and interpolation rather than direct color sensing by pixels.

- Connects simple optical geometry to practical image properties like field of view and image inversion.

- Identifies geometric causes of vignetting, a common artifact in photography and lens design.

Key facts

- Digital image sensors are grids of photodetectors that convert photons into electric current.

- Photodetectors measure total light intensity and do not directly register color.

- Color filters (for example a Bayer filter) place red, green and blue filters over pixels; the Bayer pattern uses two greens per 2×2 block.

- Demosaicing reconstructs full-color pixels by filling in missing color components, often via interpolation.

- Shutter speed (exposure time) controls how long photons are collected and therefore image brightness.

- A pinhole camera inverts the scene because light rays cross at the aperture.

- Moving the aperture farther from the sensor narrows the field of view and makes objects appear larger in the image.

- Corner darkening follows a cosine-fourth-power relationship (cos^4(α)), driven by distance and angular factors.
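The link between hole-to-sensor distance, field of view and apparent size follows from similar triangles. The sketch below assumes an ideal pinhole and a flat sensor centered behind it; the function and parameter names are hypothetical and do not come from the source.

```python
import math


def field_of_view_deg(sensor_width, hole_to_sensor_distance):
    """Full angle of view across the sensor width for an ideal pinhole."""
    half_angle = math.atan(sensor_width / (2 * hole_to_sensor_distance))
    return math.degrees(2 * half_angle)


def image_size(object_size, object_distance, hole_to_sensor_distance):
    """Size of an object's image on the sensor, by similar triangles.
    All lengths must use the same unit."""
    return object_size * hole_to_sensor_distance / object_distance
```

For a sensor 2 units wide with the hole 1 unit away, `field_of_view_deg(2, 1)` is about 90 degrees. Doubling the distance narrows that angle and doubles the value returned by `image_size` for the same object.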

What to watch next

- Further developments and variety in demosaicing algorithms and alternative color filter arrays (discussed but not detailed in the source).

- How lens and aperture design are used in practice to control vignetting and field of view (not confirmed in the source).

- The influence of exposure choices on motion blur and image noise (not confirmed in the source).

Quick glossary

- Photodetector: A sensor element that converts incoming photons into an electrical signal proportional to light intensity.

- Bayer filter: A common color filter array that arranges red, green and blue filters over sensor pixels, typically with two greens per 2×2 block.

- Demosaicing: A computational process that reconstructs full-color image pixels by estimating missing color components from neighboring filtered pixels.

- Shutter speed (exposure time): The duration during which a sensor collects light; longer times increase brightness, shorter times reduce it.

- Vignetting: Darkening toward the edges of an image caused by geometric and angular reductions in light reaching the sensor.

Reader FAQ

Do camera sensors record color directly?

No. Individual photodetectors measure intensity; color is obtained by placing color filters over pixels and reconstructing missing channels via demosaicing.

Why does a pinhole camera produce an inverted image?

Rays from the scene cross at the aperture, so top/bottom and left/right are swapped on the sensor.
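The inversion falls straight out of the projection geometry. Below is a minimal sketch, assuming an ideal pinhole at the origin, a scene point at depth `z` in front of it and a flat sensor behind it; the function name and coordinate convention are illustrative assumptions.

```python
def project_through_pinhole(x, y, z, hole_to_sensor_distance):
    """Map a scene point (x, y, z) to sensor coordinates.

    The ray passes through the hole at the origin and continues to the
    sensor plane behind it, which flips the sign of both coordinates.
    """
    if z <= 0:
        raise ValueError("the point must lie in front of the pinhole (z > 0)")
    scale = -hole_to_sensor_distance / z
    return (x * scale, y * scale)
```

A point above and to the right of the axis, such as `(1.0, 2.0, 4.0)` with the sensor 2 units behind the hole, lands at `(-0.5, -1.0)`: below and to the left.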

What causes vignetting in the corners of photos?

Three effects combine: light reaching the corners travels farther, the aperture appears foreshortened when viewed at an angle, and the light strikes the sensor obliquely. Together they reduce illumination according to a cos^4(α) relationship.
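One way to see where the fourth power comes from is to multiply the individual factors. The split below is the standard textbook breakdown for a flat sensor; how it maps onto the source's wording is an assumption, and the function name is hypothetical.

```python
import math


def relative_illumination(alpha_rad):
    """Illumination at off-axis angle alpha, relative to the sensor center."""
    cos_a = math.cos(alpha_rad)
    distance_factor = cos_a ** 2  # path is 1/cos(alpha) longer; inverse-square law
    aperture_factor = cos_a       # the hole looks foreshortened from off-axis
    sensor_factor = cos_a         # light hits the flat sensor at a slant
    return distance_factor * aperture_factor * sensor_factor
```

At 45 degrees off-axis this gives about a quarter of the central illumination, and at 60 degrees about one sixteenth.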

Are analog film processes discussed in detail?

Not in detail. The source notes historical use of silver halide on film but focuses on digital sensors.

Source excerpt: Bartosz Ciechanowski, "Cameras and Lenses", December 7, 2020. "Pictures have always been a meaningful part of the human experience. From the…"

Sources

- Cameras and Lenses (2020)

- What's the difference between vignetting and pinhole?

- Pinholes and image circle/vignetting

- Understanding Lens Vignetting
