TL;DR

A Director of Engineering at Mon Ami described adding an AI agent to a seven-year-old Ruby on Rails monolith using the RubyLLM gem, Algolia search, and Pundit policies. The implementation exposes a controlled function-call tool that runs Algolia queries and then applies Pundit scopes, so the language model only sees client data the current user is permitted to view.

What happened

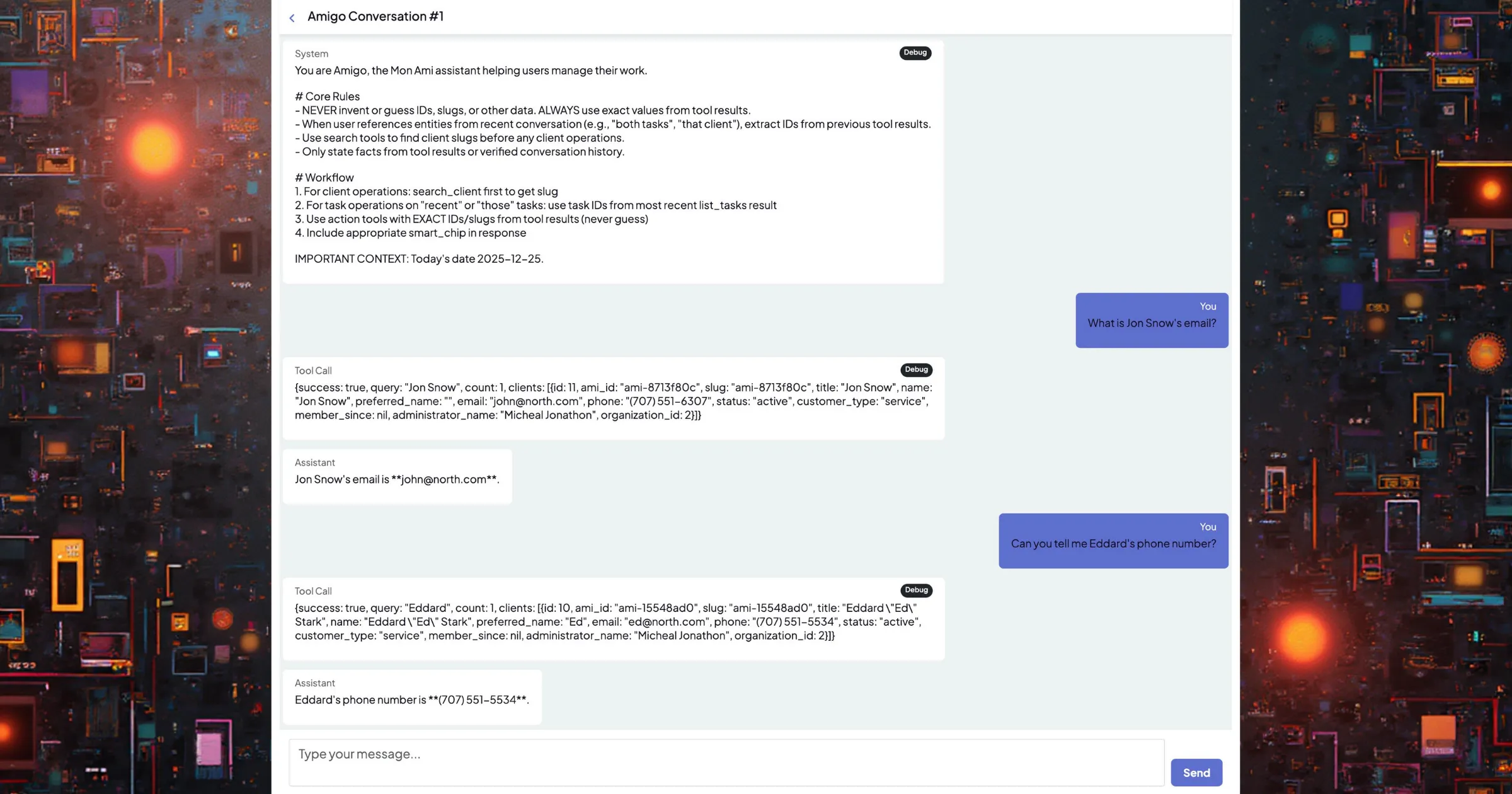

Mon Ami, a US startup running a seven-year-old multi-tenant Ruby on Rails application for aging and disability case workers, added an in-app AI agent without loosening access controls or creating a parallel system. The engineer used the RubyLLM gem to manage Conversations and define function-call style tools; the primary tool searches an existing Algolia index. The tool runs an Algolia search, extracts the hit IDs, converts them into an ActiveRecord scope, and then resolves visibility with the app’s Pundit policy before returning a small JSON result. The chat surface is a Turbo/Stimulus-driven UI that enqueues an Active Job to process each message; Conversation instances broadcast updates when responses arrive. The author tested multiple models (gpt-5, gpt-4o, gpt-4) and found that gpt-4o offered the best balance of latency and reliability for their flows. The author also noted plans to evaluate Anthropic models and Google’s Gemini.
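The search-then-authorize flow described above can be sketched in plain Ruby. This is a minimal illustration, not the author's code: `FakeSearchIndex`, `ClientPolicyScope`, `SearchClientsTool`, the `Client` and `User` structs, and the sample records are all hypothetical stand-ins so the example runs without Rails, Algolia, or Pundit. The point it tries to show is the ordering: the index may return hits from any tenant, and the policy scope narrows them before anything is serialized for the model.

```ruby
require "json"

# Hypothetical stand-ins for app models (not from the source article).
Client = Struct.new(:id, :name, :tenant_id, :ssn, keyword_init: true)
User   = Struct.new(:id, :tenant_id, keyword_init: true)

CLIENTS = [
  Client.new(id: 1, name: "Ada Lovelace", tenant_id: 10, ssn: "000-00-0001"),
  Client.new(id: 2, name: "Ada Byron",    tenant_id: 20, ssn: "000-00-0002"),
  Client.new(id: 3, name: "Grace Hopper", tenant_id: 10, ssn: "000-00-0003")
].freeze

# Stand-in for the search index. It returns hits for ANY tenant, so the
# policy step below is the thing that enforces access.
class FakeSearchIndex
  def search(query)
    hits = CLIENTS.select { |c| c.name.downcase.include?(query.downcase) }
    { "hits" => hits.map { |c| { "objectID" => c.id.to_s } } }
  end
end

# Stand-in for a Pundit-style policy scope: narrows a collection to the
# records the given user may see (assumed rule here: same tenant only).
class ClientPolicyScope
  def initialize(user, scope)
    @user = user
    @scope = scope
  end

  def resolve
    @scope.select { |c| c.tenant_id == @user.tenant_id }
  end
end

class SearchClientsTool
  # Only these fields are ever serialized for the model.
  ALLOWED_FIELDS = %i[id name].freeze

  def initialize(user:, index: FakeSearchIndex.new)
    @user = user
    @index = index
  end

  def execute(query:)
    # 1. Search, 2. collect hit IDs, 3. build a scope from those IDs
    #    (in Rails this would resemble Client.where(id: ids)),
    # 4. resolve the policy scope, 5. return a small JSON payload.
    ids = @index.search(query)["hits"].map { |hit| hit["objectID"].to_i }
    candidates = CLIENTS.select { |c| ids.include?(c.id) }
    visible = ClientPolicyScope.new(@user, candidates).resolve
    JSON.generate(visible.map { |c| c.to_h.slice(*ALLOWED_FIELDS) })
  end
end

tool = SearchClientsTool.new(user: User.new(id: 99, tenant_id: 10))
puts tool.execute(query: "ada") # prints [{"id":1,"name":"Ada Lovelace"}]
```

Both "Ada" records match the search, but only the one in the caller's tenant survives the policy step, and the sensitive `ssn` field never reaches the JSON result.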

Why it matters

- Demonstrates a pattern to add LLM functionality while preserving existing authorization rules in a legacy monolith.

- Shows how function-call tools can limit model access to only the data returned by controlled APIs.

- Reuses existing infrastructure (Algolia for search, Pundit for policy) to avoid introducing a parallel data access surface.

- Illustrates practical model trade-offs (context size, speed, hallucination tendency) that affect developer experience.

Key facts

- The project was implemented in an existing multi-tenant Rails monolith serving sensitive client data.

- RubyLLM was used to manage Conversations, Messages, and tool function calls.

- Tools were loaded from app/tools/**/*.rb and exposed to Conversations via a with_tools API.

- The search tool queries Algolia, collects hit IDs, then applies a Pundit policy scope before returning client fields.

- RubyLLM configuration shown included request_timeout = 600 seconds and max_retries = 3.

- The UI is a Turbo Streams form that enqueues ProcessMessageJob; the job calls conversation.ask to process input.

- Model experiments included gpt-5 (large context but slower for multi-tool flows), gpt-4 (prone to hallucinations), and gpt-4o (favored balance).

- Building the first agent took roughly two to three days, with development aided by Claude.
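The message flow in the facts above (form submission enqueues a job, the job calls `conversation.ask`, the conversation broadcasts the reply) can be sketched as follows. Everything here is a hypothetical stand-in written for illustration: `FakeConversation` replaces the RubyLLM-backed Conversation model, the in-memory `QUEUE` replaces Active Job, the `broadcasts` array replaces Turbo Stream broadcasts, and `submit_message` plays the role of a controller action. Only the names `ProcessMessageJob` and `ask` come from the summary.

```ruby
# Hypothetical stand-in for the Conversation model.
class FakeConversation
  attr_reader :messages, :broadcasts

  def initialize
    @messages = []
    @broadcasts = []
  end

  # Stand-in for conversation.ask: stores the user message, produces a
  # canned reply (a real model call would happen here), then broadcasts.
  def ask(content)
    @messages << { role: :user, content: content }
    reply = { role: :assistant, content: "Echo: #{content}" }
    @messages << reply
    broadcast(reply)
    reply
  end

  private

  # Stand-in for a Turbo Stream broadcast: records what would be pushed.
  def broadcast(message)
    @broadcasts << message
  end
end

# Stand-in for an Active Job class backed by an in-memory queue.
class ProcessMessageJob
  QUEUE = []

  def self.perform_later(conversation, content)
    QUEUE << [conversation, content]
  end

  # Simulates a background worker running every queued job.
  def self.drain
    until QUEUE.empty?
      conversation, content = QUEUE.shift
      new.perform(conversation, content)
    end
  end

  def perform(conversation, content)
    conversation.ask(content)
  end
end

# Stand-in for the controller action behind the chat form: it returns
# immediately and leaves the slow model call to the job.
def submit_message(conversation, content)
  ProcessMessageJob.perform_later(conversation, content)
  :accepted
end

conversation = FakeConversation.new
submit_message(conversation, "Find clients named Ada")
puts conversation.broadcasts.length # prints 0 (job has not run yet)
ProcessMessageJob.drain
puts conversation.broadcasts.length # prints 1
```

Keeping the model call inside the job is what lets the request return quickly even with a long timeout such as the 600 seconds mentioned above; the UI is updated later by the broadcast rather than by the HTTP response.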

What to watch next

- Evaluation of Anthropic models for performance and latency (confirmed in the source).

- Testing and comparison of Google’s Gemini model (confirmed in the source).

- How this approach behaves under real production load and edge cases (not confirmed in the source).

- User acceptance and privacy audit outcomes before broader rollout (not confirmed in the source).

Quick glossary

- Ruby on Rails: A web application framework in Ruby that emphasizes convention over configuration and rapid development.

- Multi-tenant: An architecture where a single application instance serves multiple customer organizations, isolating each tenant’s data and configuration.

- Pundit: A Ruby library for handling authorization logic via policies and scopes.

- Algolia: A hosted search service that provides fast, indexed search capabilities for application data.

- Function-call tools: A pattern where an LLM can decide to call predefined functions (tools) and receive structured results to augment its responses.
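To make the function-call pattern from the glossary concrete, here is a minimal dispatch sketch. It is not RubyLLM's API: the `TOOLS` registry, the `DIRECTORY` sample data, the `dispatch_tool_call` helper, and the JSON shape of the tool call are all assumptions made for illustration. The idea it shows is that the model only names a function and supplies arguments, while the application decides which functions exist and what they return.

```ruby
require "json"

# Hypothetical sample data the tool is allowed to search.
DIRECTORY = ["Ada Lovelace", "Grace Hopper"].freeze

# Hypothetical registry: the only functions the model may invoke.
TOOLS = {
  "search_clients" => lambda do |args|
    pattern = /#{Regexp.escape(args.fetch("query"))}/i
    { "names" => DIRECTORY.grep(pattern) }
  end
}.freeze

# Takes a parsed tool call (assumed shape: name plus arguments) and
# returns a JSON string to hand back to the model. Unregistered names
# are rejected instead of being executed.
def dispatch_tool_call(call)
  tool = TOOLS[call["name"]]
  return JSON.generate({ "error" => "unknown tool: #{call['name']}" }) unless tool

  JSON.generate(tool.call(call.fetch("arguments", {})))
end

# A tool call as a model might emit it (the shape is an assumption).
call = JSON.parse('{"name":"search_clients","arguments":{"query":"ada"}}')
puts dispatch_tool_call(call) # prints {"names":["Ada Lovelace"]}

# A call to something outside the registry never runs any code.
puts dispatch_tool_call({ "name" => "delete_all", "arguments" => {} })
# prints {"error":"unknown tool: delete_all"}
```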

Reader FAQ

Does the LLM get unrestricted access to client records?

No. The implementation routes searches through a tool that runs an Algolia query and then applies the app’s Pundit policy scope before returning a limited set of fields.

How long did it take to build the first agent?

The author states that the first agent took about two to three days to build, with development aided by Claude.

Which models were tested and which was preferred?

The team tested gpt-5, gpt-4o, and gpt-4; gpt-4o was preferred for its balance of speed and correctness.

Has this agent been fully deployed to production?

Not confirmed in the source.

Source article: “Building an AI agent inside a 7-year old Rails application” (Dec 26, 2025; 6 min read; tags: ruby-on-rails, rubyllm). Excerpt: “We run a multi-tenant Rails application with sensitive data and layered authorization….”

Sources

- Building an AI agent inside a 7-year-old Rails monolith

- Rails Integration

- Active Agent | The AI Framework For Ruby on Rails

- Building an AI Agent with Ruby and Rails from Scratch
