TL;DR

MIT researchers combined modern learning-based vision models with classical geometry to let robots build large-scale 3D maps by creating and aligning many small submaps. The system runs in near real time, doesn't require calibrated cameras, and produced reconstructions with average errors under 5 cm in tests.

What happened

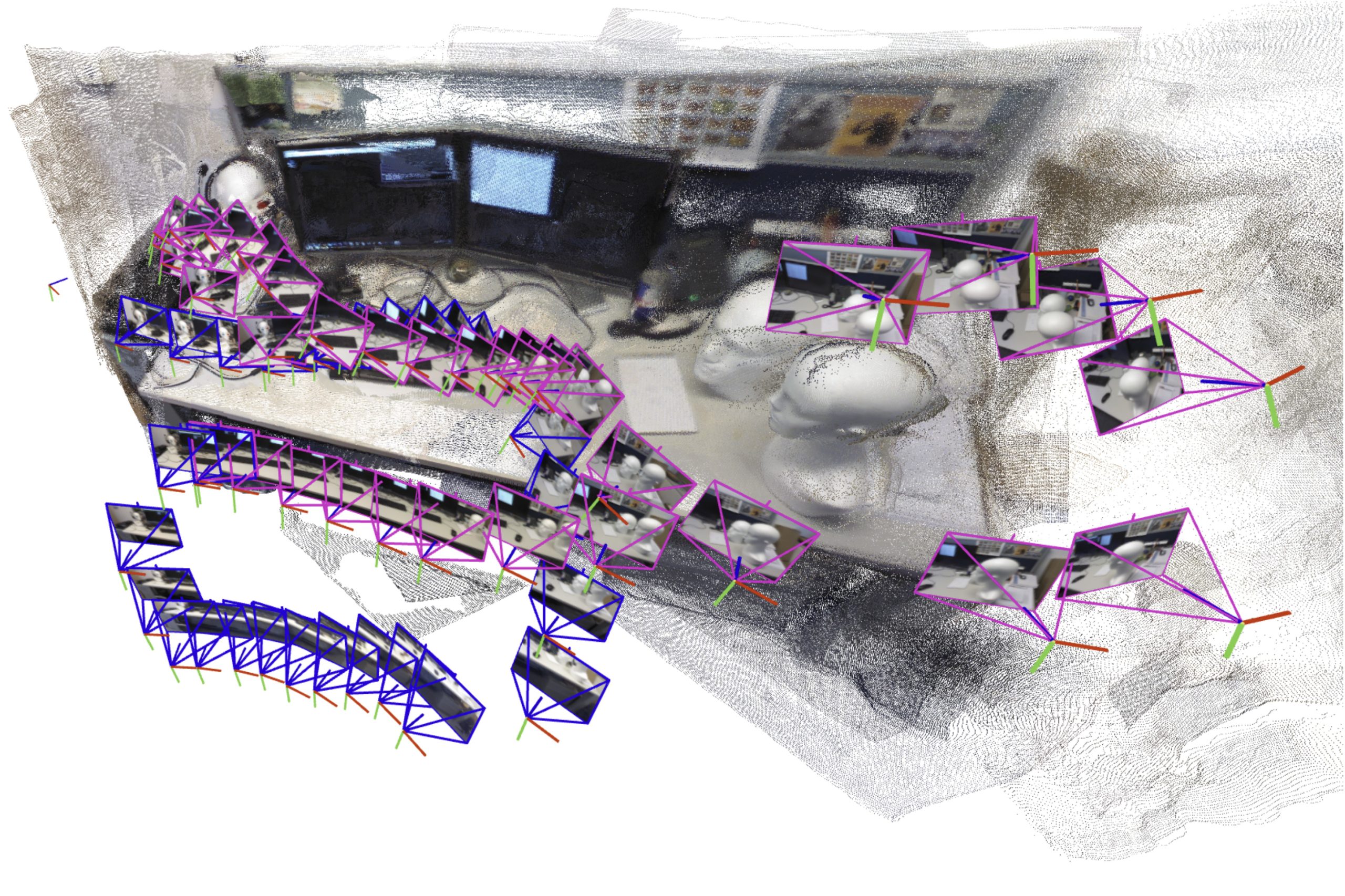

A team at MIT developed a new simultaneous localization and mapping (SLAM) system that lets a robot reconstruct large, complex 3D scenes by generating smaller submaps and stitching them together. Instead of feeding thousands of images to a single learned model, the approach builds many local dense submaps from short image batches, then aligns them using a more flexible mathematical transformation that can account for the deformation and ambiguity that learning-based modules introduce into each submap. The pipeline outputs a full 3D reconstruction and estimates of the camera's location in near real time.

In experiments, the researchers produced detailed reconstructions of indoor scenes, including the MIT Chapel and corridor-like settings, from short cellphone videos, with average reconstruction errors below 5 centimeters. The method requires no pre-calibrated cameras or expert tuning. The paper, led by graduate student Dominic Maggio with Hyungtae Lim and Luca Carlone, will be presented at NeurIPS.
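To make the batching idea concrete, here is a minimal Python sketch that splits a video's frames into overlapping batches, one per submap. The 60-frame cap echoes the roughly 60-image limit reported for earlier learned models; the one-frame overlap between consecutive batches and the function name `make_submaps` are illustrative assumptions, not details taken from the paper.

```python
from typing import List


# Hypothetical helper (not from the paper): split a frame sequence into
# overlapping batches, one batch per submap.
def make_submaps(num_frames: int, max_frames: int = 60, overlap: int = 1) -> List[List[int]]:
    """Return lists of frame indices. Each list has at most `max_frames`
    entries, and consecutive lists share `overlap` frames so neighbouring
    submaps have something in common to align on."""
    if overlap < 0 or max_frames <= overlap:
        raise ValueError("need 0 <= overlap < max_frames")
    step = max_frames - overlap  # how far each new batch advances
    submaps: List[List[int]] = []
    start = 0
    while start < num_frames:
        end = min(start + max_frames, num_frames)
        submaps.append(list(range(start, end)))
        if end == num_frames:  # last batch reached the end of the video
            break
        start += step
    return submaps


# Example: a 130-frame clip becomes three submaps.
print([len(s) for s in make_submaps(130)])  # [60, 60, 12]
```

Each batch stays small enough for a learned model to process, and the shared frames give the later alignment step a common anchor between neighbouring submaps.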

Why it matters

- Enables faster mapping over large areas by composing many small maps rather than processing thousands of images at once.

- Reduces operational complexity because it does not require calibrated cameras or extensive system tuning.

- Could improve responsiveness of search-and-rescue robots operating in hazardous, time-sensitive environments.

- May be applied to consumer and industrial scenarios such as wearable XR devices and warehouse robotics.

Key facts

- Problem addressed: simultaneous localization and mapping (SLAM) for large, unstructured environments.

- Prior learned models could typically process only about 60 images at a time, limiting scale.

- New approach builds dense submaps incrementally and stitches them together using transformations that model how each submap is deformed.

- System produces both a 3D reconstruction and camera-location estimates in close to real time.

- Test reconstructions included indoor scenes captured with short cellphone videos; average error was under 5 centimeters.

- The technique does not require camera calibration or hand-tuned implementations.

- Authors: Dominic Maggio (lead author), Hyungtae Lim, and Luca Carlone (senior author).

- Paper title referenced: 'VGGT-SLAM: Dense RGB SLAM Optimized on the SL(4) Manifold'.

- Work will be presented at the Conference on Neural Information Processing Systems (NeurIPS).

- Funding came from the U.S. National Science Foundation, the U.S. Office of Naval Research, and the National Research Foundation of Korea.
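The stitching step can be illustrated with a classic technique: estimating a 4×4 projective transformation (a 3D homography, 15 degrees of freedom once scale is fixed) between two submaps from corresponding 3D points using the direct linear transform (DLT). This is a hedged sketch, not the authors' method. The paper's title indicates that it optimizes on the SL(4) manifold, whereas this code solves a single linear least-squares problem. It assumes the point correspondences are already known (for example, from a frame the two submaps share), and all function names are hypothetical.

```python
import numpy as np

# Hypothetical helpers: a DLT sketch, not the VGGT-SLAM implementation.


def to_homogeneous(points: np.ndarray) -> np.ndarray:
    """(N, 3) Euclidean points -> (N, 4) homogeneous points with w = 1."""
    return np.hstack([points, np.ones((points.shape[0], 1))])


def apply_homography(H: np.ndarray, points: np.ndarray) -> np.ndarray:
    """Map (N, 3) points through a 4x4 homography, then divide by w."""
    mapped = to_homogeneous(points) @ H.T
    return mapped[:, :3] / mapped[:, 3:4]


def estimate_homography_3d(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Estimate H with dst ~ H @ src from (N, 3) correspondences.

    15 unknowns and 3 equations per point, so N >= 5 in general position.
    The result is scaled so that |det H| = 1; when det H > 0 this places
    H in SL(4).
    """
    if src.shape != dst.shape or src.shape[0] < 5:
        raise ValueError("need matching (N, 3) arrays with N >= 5")
    rows = []
    for x, y in zip(to_homogeneous(src), dst):
        # For each output coordinate r: (row r of H) . x - y_r * (row 4 of H) . x = 0
        for r in range(3):
            row = np.zeros(16)
            row[4 * r:4 * r + 4] = x
            row[12:16] = -y[r] * x
            rows.append(row)
    A = np.asarray(rows)
    # Least-squares solution: right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(4, 4)
    return H / abs(np.linalg.det(H)) ** 0.25
```

With real submaps the corresponding points would be noisy, so a linear solve like this would normally be wrapped in coordinate normalization and a robust estimator such as RANSAC, and then refined by nonlinear optimization.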

What to watch next

- Efforts to deploy the method on real robots operating in particularly complex or hazardous environments, which the researchers describe as a next step.

- Improvements aimed at increasing reliability for especially complicated scenes.

- Potential uptake in XR wearables and warehouse robotics if real-world robustness matches experimental results.

Quick glossary

- SLAM (Simultaneous Localization and Mapping): The process by which a device builds a map of an unknown environment while simultaneously estimating its own pose within that map.

- Submap: A smaller, local portion of a larger map that represents part of the environment and can later be combined with other submaps.

- 3D reconstruction: The creation of a three-dimensional model of a scene or object from sensor data such as images or depth measurements.

- Camera calibration: The process of determining a camera's internal parameters (like focal length and lens distortion) needed for accurate metric measurements.

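- Manifold (in this context): A smooth mathematical space on which optimization can be carried out, used here to represent the transformations that align submaps and camera poses. The paper's title refers to optimization on the SL(4) manifold, the group of 4×4 real matrices with determinant 1, which can represent 3D projective transformations (homographies).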
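Why a 4×4 transformation appears at all can be shown with a standard multiple-view-geometry argument (textbook material, not a derivation quoted from the paper). With uncalibrated cameras, a homogeneous 3D point X_j projects into image i through an unknown 3×4 camera matrix P_i, so any invertible 4×4 matrix H can be inserted without changing what the cameras see. Fixing the overall scale of H leads to SL(4):

```latex
\mathbf{x}_{ij} \simeq P_i \mathbf{X}_j = (P_i H^{-1})(H \mathbf{X}_j),
\qquad H \in \mathbb{R}^{4 \times 4},\ \det H \neq 0

% H and \lambda H (\lambda \neq 0) describe the same projective map, so fix the scale:
\mathrm{SL}(4) = \{\, H \in \mathbb{R}^{4 \times 4} : \det H = 1 \,\},
\qquad \dim \mathrm{SL}(4) = 16 - 1 = 15

% For comparison, a similarity transform (rotation, translation, uniform scale):
\dim \mathrm{Sim}(3) = 3 + 3 + 1 = 7
```

The 8 extra degrees of freedom are plausibly what let the alignment absorb the projective distortion an uncalibrated reconstruction can carry, the kind of "deformation and ambiguity" described above.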

Reader FAQ

Does the system require calibrated cameras?

No. The researchers state that the method does not need pre-calibrated cameras.

How accurate are the reconstructions?

In reported tests the average reconstruction error was under 5 centimeters.
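The article does not say how the average error was computed. One common choice for point-cloud reconstructions is the mean distance from each reconstructed point to its nearest ground-truth point, sometimes symmetrized into a Chamfer-style average. The sketch below shows that metric under two assumptions: the reconstruction has already been expressed in the reference's metric frame, and the clouds are small enough for a brute-force search. The function names are hypothetical.

```python
import numpy as np


# Hypothetical metric helpers; not necessarily the metric used in the paper.
def mean_nn_distance(recon: np.ndarray, reference: np.ndarray) -> float:
    """Mean distance from each (N, 3) reconstructed point to its nearest
    (M, 3) reference point, in the inputs' units. Brute force, O(N * M)."""
    diffs = recon[:, None, :] - reference[None, :, :]  # (N, M, 3)
    dists = np.linalg.norm(diffs, axis=2)              # (N, M)
    return float(dists.min(axis=1).mean())


def chamfer_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Symmetric average of the two one-directional mean distances."""
    return 0.5 * (mean_nn_distance(a, b) + mean_nn_distance(b, a))


# Example: a toy reference cloud in metres, shifted 3 cm along x.
reference = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
recon = reference + np.array([0.03, 0.0, 0.0])
print(round(mean_nn_distance(recon, reference) * 100, 2), "cm")
```

For clouds with millions of points, a spatial index such as a k-d tree would replace the brute-force distance matrix.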

Was this tested on real robots in the field?

Not confirmed in the source; experiments used short videos (including cellphone captures) and researchers said they plan to implement the method on real robots in the future.

Where was the work published or presented?

The team will present the work at the Conference on Neural Information Processing Systems (NeurIPS).

Who supported the research?

Support came from the U.S. National Science Foundation, U.S. Office of Naval Research, and the National Research Foundation of Korea.

A new approach developed at MIT could help a search-and-rescue robot navigate an unpredictable environment by rapidly generating an accurate map of its surroundings.

Adam Zewe | MIT News Publication…

Sources

- Teaching robots to map large environments

- Teaching robots to map large environments – IDSS

- AI mapping system builds 3D maps in seconds for rescue …

- MIT's New Tech Helps Robots Map Big Spaces Fast
