TL;DR

MIT engineers created a workflow that converts spoken prompts into physical objects by combining speech recognition, 3D generative models, voxelization and robotic assembly. A tabletop robotic arm has produced stools, shelves, chairs and decorative items in as little as five minutes using modular building blocks.

What happened

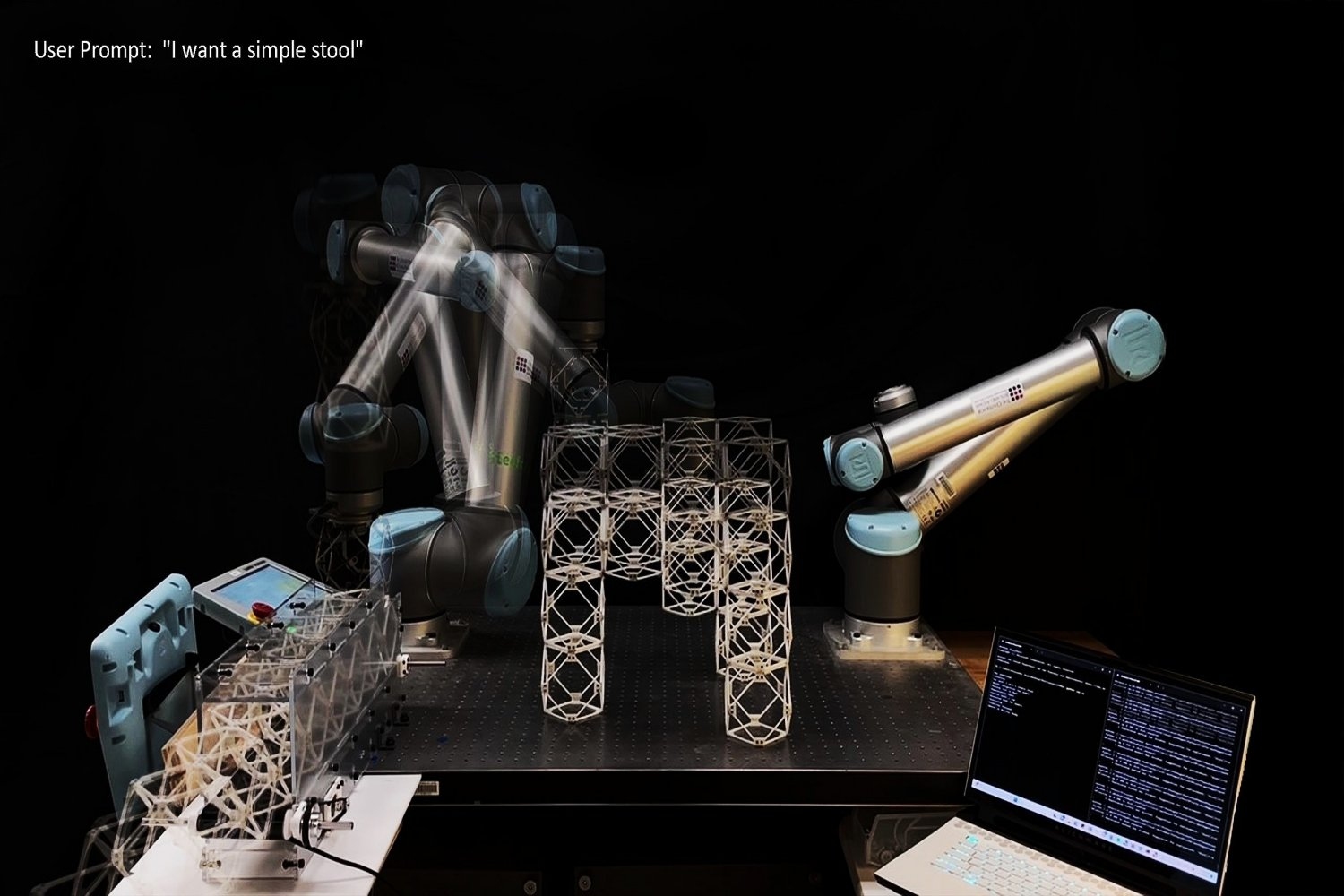

Researchers at MIT’s Morningside Academy for Design and the Center for Bits and Atoms built a prototype “speech-to-reality” pipeline that turns natural-language prompts into assembled physical objects. The process begins with speech recognition, and a large language model processes the user’s request to guide a 3D generative AI model that produces a digital mesh. That mesh is voxelized into discrete modular components, then geometrically adjusted to account for fabrication constraints such as overhangs, connectivity and component count. The system then generates an assembly sequence and computes robotic path planning so that a tabletop robotic arm can pick and place the parts to assemble the object. A team led by graduate student Alexander Htet Kyaw, with Se Hwan Jeon and Miana Smith, has demonstrated the system building stools, shelves, chairs, a small table and a decorative dog figure. The project emphasizes fast on-demand fabrication, modular reuse to reduce waste, and ongoing work to strengthen physical connections and expand assembly approaches.
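
The voxelization and connectivity checks described above can be illustrated with a short Python sketch. It is not the MIT team’s code: it assumes the shape arrives as a hypothetical inside/outside test (`stool`) instead of a generated mesh, that every voxel is one unit cube, and that “connected” means face-adjacent (6-neighbour) contact. The voxel count doubles as the component count a fabrication budget would be checked against.

```python
from collections import deque
from typing import Callable, Set, Tuple

Voxel = Tuple[int, int, int]  # (x, y, z) grid indices, z pointing up


def voxelize(inside: Callable[[float, float, float], bool],
             size: Tuple[int, int, int], pitch: float = 1.0) -> Set[Voxel]:
    """Mark every grid cell whose center lies inside the shape as a voxel."""
    nx, ny, nz = size
    return {
        (x, y, z)
        for x in range(nx) for y in range(ny) for z in range(nz)
        if inside((x + 0.5) * pitch, (y + 0.5) * pitch, (z + 0.5) * pitch)
    }


def is_connected(voxels: Set[Voxel]) -> bool:
    """True if all voxels form one face-connected (6-neighbour) piece."""
    if not voxels:
        return True
    start = next(iter(voxels))
    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first flood fill from an arbitrary voxel
        x, y, z = queue.popleft()
        for dx, dy, dz in ((1, 0, 0), (-1, 0, 0), (0, 1, 0),
                           (0, -1, 0), (0, 0, 1), (0, 0, -1)):
            n = (x + dx, y + dy, z + dz)
            if n in voxels and n not in seen:
                seen.add(n)
                queue.append(n)
    return len(seen) == len(voxels)


def stool(x: float, y: float, z: float) -> bool:
    """Hypothetical stool: a 4x4 seat slab at height 3 on four corner legs."""
    seat = 0 <= x < 4 and 0 <= y < 4 and 3 <= z < 4
    leg = (x < 1 or x >= 3) and (y < 1 or y >= 3) and 0 <= z < 3
    return seat or leg


if __name__ == "__main__":
    blocks = voxelize(stool, size=(4, 4, 4))
    print(len(blocks), is_connected(blocks))  # 28 True
```

A disconnected result would signal that the shape needs geometric adjustment before it can be built from blocks.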

Why it matters

- Makes design and basic manufacturing accessible to people without 3D modeling or robot programming expertise.

- Can produce objects in minutes, offering a speed advantage over many 3D-printing workflows that take hours or days.

- Uses modular components intended for disassembly and reuse, which could reduce material waste over single-use fabrication.

- Combines advances in natural language processing, 3D generative AI and robotics, demonstrating an integrated human–AI–robot fabrication interface.
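
The reuse claim can be made concrete by comparing two voxel designs. In this Python sketch, `reconfiguration_plan` is a hypothetical helper, not part of the MIT system, and it assumes every block is an identical, interchangeable unit cube: blocks at shared positions stay put, removed blocks fill new positions first, and only the shortfall comes from stock.

```python
from typing import Dict, Set, Tuple

Voxel = Tuple[int, int, int]  # (x, y, z) grid position of one block


def reconfiguration_plan(current: Set[Voxel], target: Set[Voxel]) -> Dict[str, int]:
    """Count block moves needed to turn one design into another,
    assuming all blocks are identical and interchangeable."""
    keep = current & target    # blocks that can stay where they are
    remove = current - target  # blocks to take off (and reuse if possible)
    add = target - current     # positions that still need a block
    reused = min(len(remove), len(add))  # removed blocks moved to new spots
    return {
        "keep": len(keep),
        "remove": len(remove),
        "add": len(add),
        "reused": reused,
        "new_from_stock": len(add) - reused,
        "left_over": len(remove) - reused,
    }


if __name__ == "__main__":
    column = {(0, 0, z) for z in range(3)}  # a 3-block column
    row = {(x, 0, 0) for x in range(4)}     # a 4-block row
    print(reconfiguration_plan(column, row))
    # {'keep': 1, 'remove': 2, 'add': 3, 'reused': 2, 'new_from_stock': 1, 'left_over': 0}
```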

Key facts

- Pipeline stages: speech recognition with LLM processing of the prompt, 3D generative AI mesh creation, voxelization into components, geometric processing, assembly sequencing, and robotic path planning.

- A tabletop robotic arm assembles objects from modular blocks; demonstrated outputs include stools, shelves, chairs, a small table and a dog statue.

- Researchers reported the ability to construct furniture-like items in as little as five minutes.

- Project leads and contributors include Alexander Htet Kyaw, Se Hwan Jeon and Miana Smith; the work was presented at the ACM Symposium on Computational Fabrication (SCF ’25), held at MIT, on Nov. 21.

- The modular design is intended to allow disassembly and reconfiguration, for example turning one piece of furniture into another, to reduce waste.

- Current assemblies use magnetic connections; the team plans to replace magnets with stronger connectors to improve load-bearing performance.

- The group has also developed pipelines that convert voxel structures into assembly sequences for small, distributed mobile robots, which could help scale the approach to other size ranges.

- The project originated in work Kyaw began in the MIT course “How to Make Almost Anything” and continued at the MIT Center for Bits and Atoms.
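
The assembly-sequencing step can be framed as ordering voxels so the structure stays buildable. The Python sketch below is a hypothetical greedy heuristic, not the published algorithm: it assumes blocks join on any shared face (as magnetic connectors might) and requires every block either to sit on the table or to touch a block that is already placed, preferring the lowest available block at each step.

```python
import heapq
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]  # (x, y, z); z = 0 is the layer on the tabletop

NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0),
              (0, -1, 0), (0, 0, 1), (0, 0, -1))


def assembly_sequence(voxels: Set[Voxel]) -> List[Voxel]:
    """Return a placement order in which each block rests on the table or
    shares a face with an earlier block; lower blocks are preferred.

    Raises ValueError if some blocks cannot be reached from the table.
    """
    # Seed the frontier with every block sitting directly on the table.
    frontier = [(v[2], v) for v in voxels if v[2] == 0]
    heapq.heapify(frontier)
    queued = {v for _, v in frontier}
    order: List[Voxel] = []
    while frontier:
        _, (x, y, z) = heapq.heappop(frontier)  # lowest available block
        order.append((x, y, z))
        for dx, dy, dz in NEIGHBOURS:
            n = (x + dx, y + dy, z + dz)
            if n in voxels and n not in queued:
                queued.add(n)
                heapq.heappush(frontier, (n[2], n))
    if len(order) != len(voxels):
        raise ValueError("structure has blocks unreachable from the table")
    return order


if __name__ == "__main__":
    # A post with one block cantilevered off its top.
    print(assembly_sequence({(0, 0, 0), (0, 0, 1), (1, 0, 1)}))
    # [(0, 0, 0), (0, 0, 1), (1, 0, 1)]
```

Raising an error for unreachable blocks mirrors the kind of connectivity constraint the geometric-processing stage is meant to resolve before assembly.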

What to watch next

- Upgrades to physical connections to improve weight-bearing capacity (team plans to move beyond magnetic joins).

- Integration of gesture recognition and augmented reality controls alongside speech for multimodal interaction.

- Translation of voxel-to-assembly pipelines to small distributed mobile robots to scale the approach to larger structures.

- Wider availability, commercial deployment timelines or regulatory/safety approvals: not confirmed in the source.

Quick glossary

- 3D generative AI: Machine learning models that can produce three-dimensional shapes or meshes from inputs such as text, sketches, or other constraints.

- Voxelization: The process of converting a 3D shape into a grid of discrete cubic elements (voxels) for modular assembly or analysis.

- Large language model (LLM): A neural network trained on extensive text data to understand and generate human-like language, often used for interpreting prompts.

- Robotic path planning: Algorithms that compute feasible movement sequences for a robot arm to pick, place and assemble components without collisions.

- Modular components: Interchangeable building blocks designed to be assembled and disassembled into different configurations.
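
For a tabletop pick-and-place cell, the simplest version of the path planning defined above is a fixed lift, travel and lower pattern. In this Python sketch, `pick_place_waypoints`, the metre units and the default `block_size` and `clearance` values are assumptions; it only keeps the carried block above the current structure and does not check the full arm for collisions the way a real motion planner would.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, robot base frame


@dataclass
class Waypoint:
    pose: Point            # gripper position (orientation omitted for brevity)
    gripper_closed: bool   # gripper state once this pose is reached


def pick_place_waypoints(pick: Point, place: Point, placed_tops: Sequence[float],
                         block_size: float = 0.04,
                         clearance: float = 0.02) -> List[Waypoint]:
    """Lift-travel-lower path for moving one block from `pick` to `place`.

    `placed_tops` holds the top-surface heights of blocks already in the
    structure. Travel happens high enough that a block hanging `block_size`
    below the gripper still clears everything by `clearance`. This is a
    clearance heuristic, not a collision-checking motion planner.
    """
    safe_z = max([pick[2], place[2], *placed_tops]) + block_size + clearance
    px, py, pz = pick
    qx, qy, qz = place
    return [
        Waypoint((px, py, safe_z), False),  # hover above the pick location
        Waypoint((px, py, pz), False),      # descend onto the block
        Waypoint((px, py, pz), True),       # close the gripper
        Waypoint((px, py, safe_z), True),   # lift clear
        Waypoint((qx, qy, safe_z), True),   # travel at the safe height
        Waypoint((qx, qy, qz), True),       # lower into position
        Waypoint((qx, qy, qz), False),      # release (connectors hold the block)
        Waypoint((qx, qy, safe_z), False),  # retreat upward
    ]
```

A real cell would pass waypoints like these to the arm’s controller or a motion-planning library for inverse kinematics and collision checking.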

Reader FAQ

How quickly can the system make an object?

Researchers report prototypes producing furniture-like items in as little as five minutes.

What kinds of items has the system produced?

Demonstrations include stools, shelves, chairs, a small table and a decorative dog figure.

Does the system use 3D printing?

No — the demonstrated workflow assembles preformed modular components rather than fabricating items via layer-by-layer 3D printing.

Is this system available for public or commercial use?

Not confirmed in the source.

Are the fabricated objects load-bearing and safe to use?

The team plans to improve weight-bearing capability by replacing magnetic joins with more robust connections; detailed load ratings are not provided.

The speech-to-reality system combines 3D generative AI and robotic assembly to create objects on demand. Credit: Denise Brehm | MIT Morningside Academy for Design. Publication date: December 5,…

Sources

- MIT researchers “speak objects into existence” using AI and robotics

- MIT Team Builds a Speech-to-Reality System that Turns …

- MIT Researchers Use AI to 'Speak' Objects into Existence

- Natural language meets robotics in MIT's on-demand …
