TL;DR

Nvidia unveiled its Rubin computing architecture at CES, saying the platform is already in production and will scale further later this year. The multi-chip design targets compute, interconnect and storage bottlenecks and is claimed to deliver large speed and efficiency gains over the prior Blackwell generation.

What happened

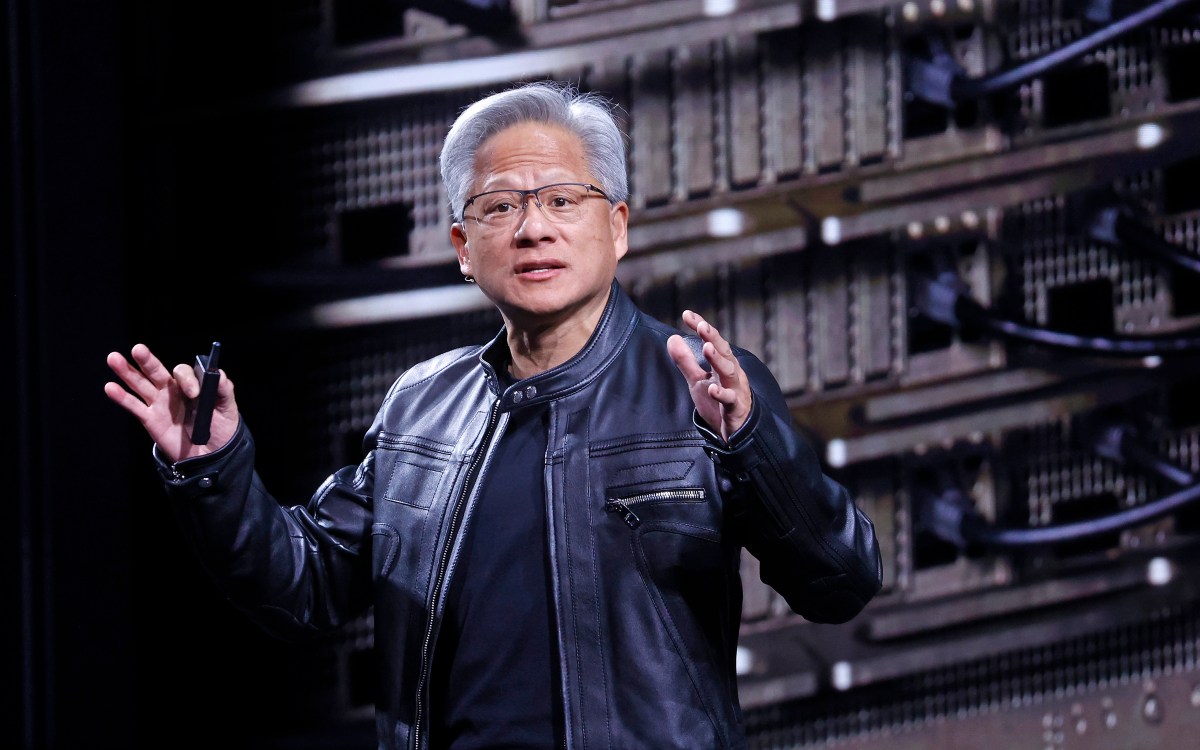

At CES, Nvidia CEO Jensen Huang introduced the Rubin computing architecture, describing it as the company’s newest platform for AI workloads. Rubin is reported to be in production now, with broader ramp-up expected in the second half of the year. The design, first announced in 2024, is a suite of six cooperating chips. It is centered on a Rubin GPU and also includes a Vera CPU intended for agentic reasoning, upgraded BlueField storage components, and NVLink interconnect improvements. Nvidia says Rubin replaces the Blackwell family and will be deployed by major cloud providers and partners, including Anthropic, OpenAI and AWS, and will power systems such as HPE’s Blue Lion and the Doudna supercomputer at Lawrence Berkeley National Lab. Based on internal tests, Nvidia claims Rubin is 3.5x faster than Blackwell on training and 5x faster on inference, reaches up to 50 petaflops, and delivers eight times more inference compute per watt. Nvidia executives also highlighted a new external storage tier aimed at easing KV cache limits for long-running and agentic workflows.

Why it matters

- Addresses growing compute and memory demands from modern AI models by combining multiple specialized chips.

- If real-world gains match Nvidia’s claims, model training and deployment costs could fall and AI development cycles could speed up.

- Prelaunch commitments from major cloud and research partners signal rapid enterprise uptake and continued centralization of AI infrastructure.

- New external storage tier targets KV cache bottlenecks that constrain agentic and long-context AI use cases.

Key facts

- Rubin architecture unveiled by Jensen Huang at CES and said to be in production.

- Rubin was first announced in 2024 and replaces Nvidia’s Blackwell architecture.

- Architecture comprises six cooperating chips, including a Rubin GPU and a new Vera CPU.

- Includes upgrades to BlueField storage technology and NVLink interconnects to address storage and interconnect bottlenecks.

- Nvidia reports Rubin is 3.5x faster than Blackwell on training and 5x faster on inference in its tests.

- Company claims Rubin can reach up to 50 petaflops and deliver eight times more inference compute per watt.

- Rubin systems are slated for use by partners such as Anthropic, OpenAI and AWS, and in HPE’s Blue Lion and LBNL’s Doudna supercomputer.

- Nvidia introduced an external storage tier intended to scale KV cache for long-running or agentic workflows.

- Huang has previously estimated $3–4 trillion could be spent on AI infrastructure over the next five years (from an October 2025 earnings call).

What to watch next

- Independent third-party benchmarks validating Nvidia’s performance and efficiency claims (not confirmed in the source).

- Detailed availability and rollout schedules from cloud providers and OEM partners beyond the stated production and second-half ramp (not confirmed in the source).

- Impact on procurement, pricing and data-center power requirements as Rubin systems enter broader commercial deployment (not confirmed in the source).

Quick glossary

- GPU: Graphics processing unit; a processor optimized for parallel workloads often used to accelerate AI model training and inference.

- KV cache: A key-value cache in which a transformer model stores the attention keys and values it has already computed for earlier tokens, so they are not recomputed for each new token. It grows with context length.

- NVLink: A high-speed interconnect technology used to connect processors and accelerators for fast data transfer between devices.

- Inference: The process of running a trained AI model to generate outputs from new inputs, as opposed to training the model.

- Petaflop: A measure of computing performance equal to one quadrillion (10^15) floating-point operations per second.
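
To make the KV cache entry more concrete, here is a minimal sketch of the usual back-of-the-envelope estimate of KV cache size for a transformer. The model dimensions below are hypothetical and chosen only for illustration; they are not from the source and are not Rubin or Nvidia specifications.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1,
                   bytes_per_value: int = 2) -> int:
    """Estimate KV cache size in bytes for a transformer decoder.

    The leading factor of 2 counts one key tensor and one value tensor
    per layer. bytes_per_value=2 assumes 16-bit storage.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


# Hypothetical model shape (an assumption, not taken from the article).
LAYERS, KV_HEADS, HEAD_DIM = 80, 8, 128

per_token = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=1)
long_context = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=1_000_000)

print(f"per token: {per_token} bytes")                  # 327680 bytes
print(f"1M-token context: {long_context / 2**30:.1f} GiB")
```

Because the cache grows linearly with context length, a single very long session under these assumed dimensions would need hundreds of gibibytes, which is the kind of pressure an external storage tier for KV cache is meant to relieve.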

Reader FAQ

When was Rubin announced and launched?

Rubin was first announced in 2024. Nvidia officially launched it at CES, saying the architecture is in production.

Is Rubin already available?

Nvidia says Rubin is in full production and will ramp further in the second half of the year.

Which companies plan to use Rubin?

The source lists Anthropic, OpenAI and Amazon Web Services as partners, and names HPE’s Blue Lion and Lawrence Berkeley National Lab’s Doudna supercomputer as systems that will use Rubin.

How much faster is Rubin compared with Blackwell?

Nvidia’s internal tests claim about 3.5x faster on training and 5x faster on inference, with up to 50 petaflops and eight times more inference compute per watt.
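
As a rough illustration of what these multipliers would mean if they held in practice, the sketch below applies them to made-up baseline numbers. The multipliers are Nvidia's claims as reported above; the baseline hours, energy and operation count are hypothetical, and the calculation assumes ideal scaling at peak throughput, which real workloads rarely achieve.

```python
# Multipliers claimed from Nvidia's internal tests, as reported in the article.
TRAINING_SPEEDUP = 3.5
INFERENCE_SPEEDUP = 5.0
PERF_PER_WATT_GAIN = 8.0
PEAK_FLOPS = 50 * 10**15  # "up to 50 petaflops", in operations per second


def scaled_time(baseline_hours: float, speedup: float) -> float:
    """Hours for the same job if throughput improves by `speedup`."""
    return baseline_hours / speedup


def energy_for_same_work(baseline_kwh: float, gain: float) -> float:
    """Energy for the same work if compute per watt improves by `gain`."""
    return baseline_kwh / gain


def seconds_at_peak(total_ops: int, peak_flops: int = PEAK_FLOPS) -> float:
    """Lower bound on runtime: total operations divided by peak throughput."""
    return total_ops / peak_flops


# Hypothetical baselines (assumptions for illustration only).
print(scaled_time(700.0, TRAINING_SPEEDUP))               # 200.0 hours
print(scaled_time(700.0, INFERENCE_SPEEDUP))              # 140.0 hours
print(energy_for_same_work(1000.0, PERF_PER_WATT_GAIN))   # 125.0 kWh
print(seconds_at_peak(10**21))                            # 20000.0 seconds
```

These figures are best read as upper bounds on the benefit until independent benchmarks are available.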

What’s the price and commercial availability schedule for Rubin systems?

Not confirmed in the source.

Today at the Consumer Electronics Show, Nvidia CEO Jensen Huang officially launched the company’s new Rubin computing architecture, which he described as the state of the art in AI hardware….

Sources

- Nvidia launches powerful new Rubin chip architecture

- NVIDIA Unveils Rubin CPX: A New Class of GPU …

- Nvidia reveals Vera Rubin Superchip for the first time

- Nvidia just admitted the general-purpose GPU era is ending
