TL;DR

MIT researchers created PhysicsGen, a simulation pipeline that expands a small set of VR demonstrations into thousands of robot-specific training trajectories. Tests on virtual and real robotic hands and arms showed substantial gains in task success and mid-task recovery, and the team plans extensions toward broader task diversity and new data sources.

What happened

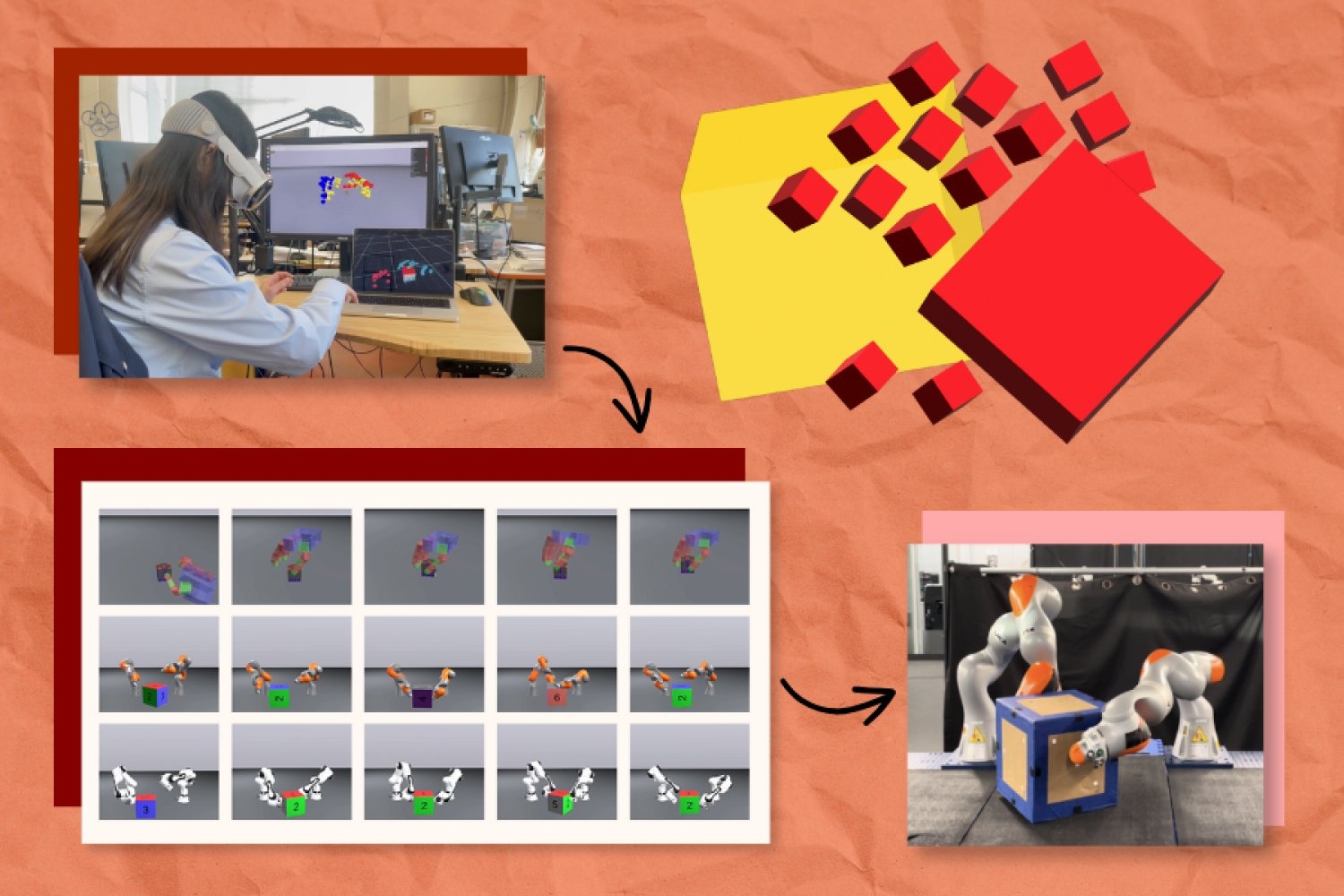

Researchers at MIT’s CSAIL and the Robotics and AI Institute unveiled PhysicsGen, a three-step pipeline that converts human VR demonstrations into large libraries of robot-ready motion trajectories. First, a VR headset captures how a person manipulates an object, and those motions are represented in a 3D physics simulator as simplified hand keypoints. Next, the simulated keypoints are remapped onto the kinematic structure of a target machine, matching hand points to robot joints. Finally, trajectory optimization generates many efficient motion variants for the task.

In some cases, PhysicsGen turned a few dozen human demonstrations into nearly 3,000 simulations per machine. In experiments, the system raised a floating robotic hand’s task accuracy to 81 percent (about a 60 percent gain over a baseline trained only on human demonstrations), boosted collaboration success for pairs of virtual arms by up to 30 percent, and produced similar performance gains and recovery behavior in tests with two real robotic arms. The team presented the work at the Robotics: Science and Systems conference.
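
To make the remapping step more concrete, here is a minimal sketch of retargeting a recorded keypoint path onto a robot's joints. It is not the PhysicsGen implementation: the two-link planar arm, the link lengths, the function names, and the sample keypoints are all assumptions chosen for illustration, and a real dexterous hand would need a far richer kinematic model.

```python
import math

# Hypothetical two-link planar arm; the link lengths (metres) are illustrative only.
LINK_1, LINK_2 = 0.30, 0.25


def inverse_kinematics(x, y, l1=LINK_1, l2=LINK_2):
    """Return (shoulder, elbow) joint angles that place the arm tip at (x, y)."""
    dist_sq = x * x + y * y
    cos_elbow = (dist_sq - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("keypoint is outside the arm's reachable workspace")
    elbow = math.acos(cos_elbow)  # picks one of the two mirror-image solutions
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def forward_kinematics(shoulder, elbow, l1=LINK_1, l2=LINK_2):
    """Return the arm tip position (x, y) for the given joint angles."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


def retarget(keypoints):
    """Map a sequence of 2D keypoints to a sequence of joint-angle pairs."""
    return [inverse_kinematics(x, y) for x, y in keypoints]


# Made-up fingertip path standing in for a recorded human demonstration.
demo_keypoints = [(0.40, 0.10), (0.35, 0.20), (0.30, 0.30)]
joint_trajectory = retarget(demo_keypoints)
```

Feeding each joint-angle pair back through `forward_kinematics` should reproduce the original keypoint, which is a quick way to check that a retargeted path still follows the demonstration.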

Why it matters

- Scales a small set of human demonstrations into large, robot-specific training datasets without re-recording per robot.

- Allows robots to try alternative motion plans at runtime, improving recovery when an intended trajectory fails.

- Facilitates reuse of older or cross-robot datasets by remapping demonstrations to new machine configurations.

- Could serve as a component in building larger, shared robotics datasets or foundation models, though the researchers frame that as a longer-term prospect.

Key facts

- PhysicsGen was developed by MIT CSAIL and the Robotics and AI Institute.

- The pipeline multiplies a few dozen VR demonstrations into nearly 3,000 simulated trajectories per machine in reported tests.

- The method uses a three-step process: VR capture of human motion, remapping of keypoints to robot joints, and trajectory optimization to produce feasible motions.

- In one experiment a simulated floating robotic hand achieved 81% task accuracy after training on PhysicsGen data, a roughly 60% improvement over a baseline trained only on human demos.

- PhysicsGen improved coordination for pairs of virtual robot arms by up to 30% compared with a human-only baseline.

- Tests with two real robotic arms showed similar gains and allowed robots to recover mid-task by selecting alternate trajectories from the generated library.

- Authors include Lujie Yang, Hyung Ju 'Terry' Suh, Bernhard Paus Græsdal, and senior author Russ Tedrake; the work was supported by the Robotics and AI Institute and Amazon.

- The researchers presented the paper titled 'Physics-Driven Data Generation for Contact-Rich Manipulation via Trajectory Optimization' at the Robotics: Science and Systems conference.
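
The mid-task recovery idea can be pictured with a small sketch. The source says only that robots recovered by drawing on alternate trajectories from the generated library; the nearest-waypoint lookup below, the function name `nearest_resume_point`, and the toy 2D library are assumptions for illustration, not the authors' method.

```python
import math


def nearest_resume_point(library, state):
    """Return (trajectory_index, waypoint_index) of the waypoint closest to `state`."""
    best = None
    for traj_index, trajectory in enumerate(library):
        for wp_index, waypoint in enumerate(trajectory):
            distance = math.dist(waypoint, state)
            if best is None or distance < best[0]:
                best = (distance, traj_index, wp_index)
    if best is None:
        raise ValueError("trajectory library is empty")
    return best[1], best[2]


# Toy library: three 2D paths that share a start and a goal (made-up values).
library = [
    [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)],
    [(0.0, 0.0), (0.5, 0.3), (1.0, 0.0)],
    [(0.0, 0.0), (0.5, -0.3), (1.0, 0.0)],
]

# The object was knocked off the intended straight-line path.
disturbed_state = (0.45, 0.25)
traj_idx, wp_idx = nearest_resume_point(library, disturbed_state)
remaining_plan = library[traj_idx][wp_idx:]
```

Here the disturbed state lies closest to the middle waypoint of the second path, so the robot would continue along that path instead of restarting the task.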

What to watch next

- Efforts to seed the pipeline with large, unstructured sources (for example, internet videos) to convert visual content into robot-ready data.

- Integration of reinforcement learning and advanced perception to expand the library beyond human-provided examples and allow visual scene interpretation.

- Extensions to more varied robot morphologies and to interactions with soft or deformable objects. The team notes that soft and deformable interactions are harder to simulate.

Quick glossary

- Trajectory optimization: A computational method that searches for efficient, feasible motion paths for a robot to accomplish a task while respecting physical constraints.

- Virtual reality (VR) demonstrations: Human-performed task recordings captured in a VR system, often used to show desired object manipulations or gestures for imitation learning.

- Policy: In robotics and machine learning, a policy is a mapping from observed states to actions that a robot executes to accomplish a task.

- Foundation model (robotics context): A large, general-purpose model trained on diverse data intended to provide transferable capabilities across many downstream robotic tasks.
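
As a toy illustration of the trajectory optimization entry above, the sketch below finds the smoothest one-dimensional path that starts at one value, passes through a required via-point, and ends at a goal. The cost function (sum of squared steps), the plain gradient descent solver, and every parameter value are assumptions for illustration. Contact-rich manipulation, as in PhysicsGen, involves far more complex dynamics and constraints.

```python
def smoothness_cost(path):
    """Sum of squared differences between consecutive waypoints."""
    return sum((b - a) ** 2 for a, b in zip(path, path[1:]))


def optimize_trajectory(n_points=11, start=0.0, via=1.0, goal=0.0,
                        step=0.2, iters=500):
    """Minimise smoothness_cost by gradient descent with three pinned waypoints."""
    mid = n_points // 2
    # Constraints: the first, middle, and last waypoints are held fixed.
    fixed = {0: start, mid: via, n_points - 1: goal}
    path = [0.0] * n_points
    for index, value in fixed.items():
        path[index] = value
    for _ in range(iters):
        grad = [0.0] * n_points
        for i in range(1, n_points - 1):
            # Derivative of the cost with respect to waypoint i.
            grad[i] = 2.0 * (2.0 * path[i] - path[i - 1] - path[i + 1])
        # Update only the free waypoints; pinned ones keep their values.
        path = [
            p if i in fixed else p - step * g
            for i, (p, g) in enumerate(zip(path, grad))
        ]
    return path


trajectory = optimize_trajectory()
```

With these settings the result approaches a straight ramp up to the via-point and a straight ramp back down, which is the lowest-cost path that still satisfies all three pinned waypoints.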

Reader FAQ

What is PhysicsGen?

A simulation pipeline that converts a small number of human VR demonstrations into large sets of robot-specific motion trajectories using remapping and trajectory optimization.

How much human demonstration data is needed?

The team reports turning just a few dozen demonstrations (24 in one experiment) into thousands of simulated trajectories.

Has PhysicsGen been tested on real robots?

Yes; experiments with a pair of real robotic arms showed improved task performance and the ability to recover mid-task using alternate trajectories.

Can PhysicsGen teach robots to handle soft or deformable objects?

The researchers say the pipeline may be extended to soft or deformable items in the future, but simulating those interactions remains difficult.

Is there a public release date or code available?

Not confirmed in the source.

The PhysicsGen system, developed by MIT researchers, helps robots handle items in homes and factories by tailoring training data to a particular machine. (Alex Shipps | MIT CSAIL)

Sources

- Simulation-based pipeline tailors training data for dexterous robots

- PhysicsGen Uses Generative AI to Turn a Handful of …

- From The Computer Science & Artificial Intelligence Laboratory …

- Physics-Driven Data Generation for Contact-Rich …
