TL;DR

Raspberry Pi has released the AI HAT+ 2, a Hailo-10H neural accelerator board rated at 40 TOPS (INT4) with 8 GB of onboard RAM, aimed at running local generative AI and vision models. It installs on the Pi 5's GPIO connector and communicates over PCIe. According to the reviewer, it needs active cooling in practice. It supports several 1.5B-parameter models at launch.

What happened

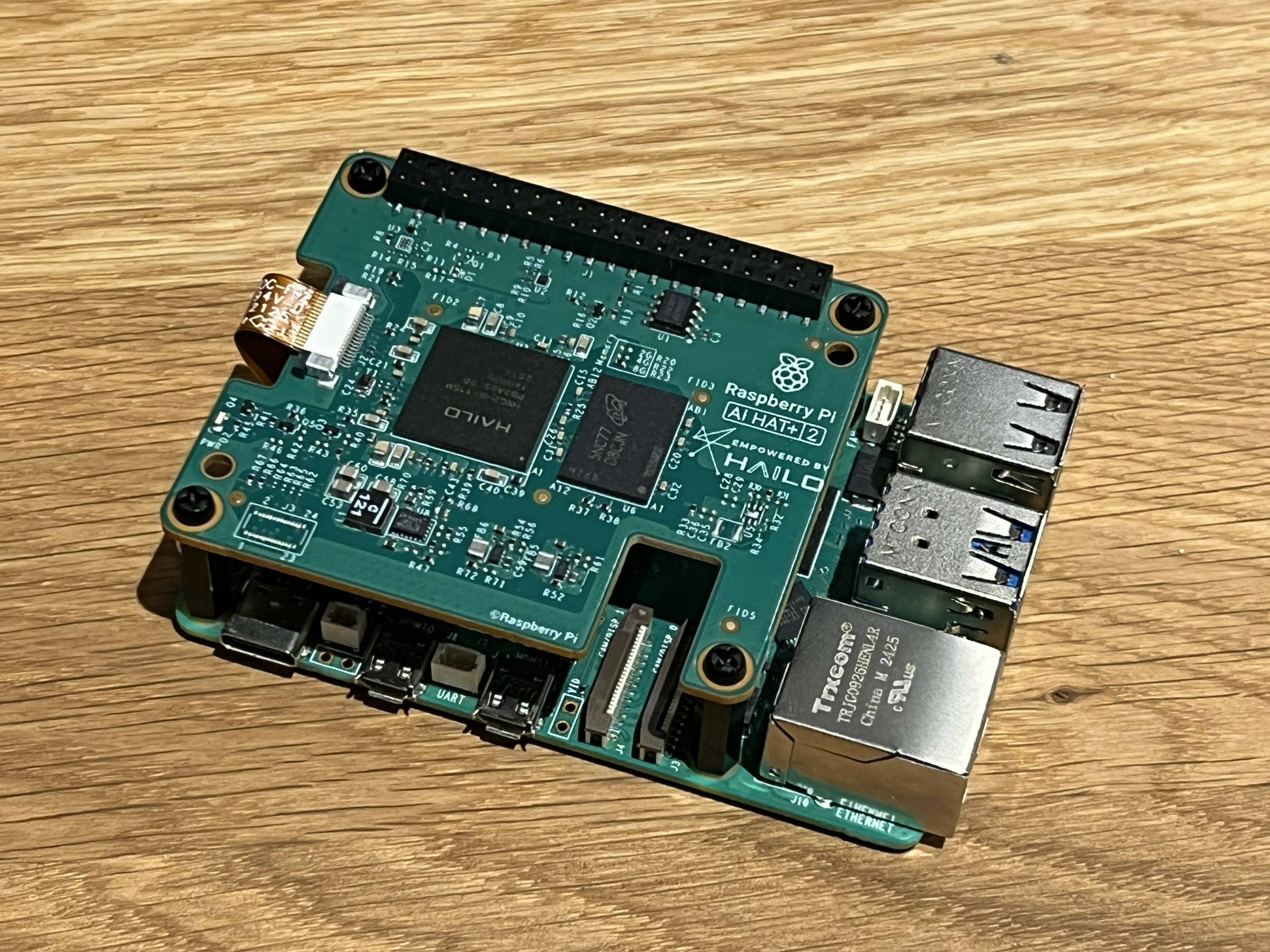

Raspberry Pi introduced the AI HAT+ 2 as an upgrade that adds on-device AI inference to the Pi 5. The board uses the Hailo-10H neural network accelerator and advertises 40 TOPS (INT4) for inference workloads. It also carries 8 GB of memory on the module to reduce pressure on the host Pi. It installs on the Pi's GPIO connector and communicates over PCIe. Raspberry Pi supplies mounting spacers so the board can be fitted alongside the Pi 5's active cooler, and offers a passive heatsink as an option. Setup on Raspberry Pi OS is straightforward, and the hardware is supported by rpicam-apps. The reviewer ran local workloads with Docker and a hailo-ollama server using the Qwen2 model without operational issues. Raspberry Pi lists several models as available at launch, most at 1.5 billion parameters, and says larger models will arrive in future updates.

Why it matters

- Provides an on-device accelerator that can offload inference from the host Pi, enabling local LLM and generative AI use cases without constant cloud access.

- Adds dedicated RAM on the HAT, which can ease memory bottlenecks for some workloads that would otherwise tax the Pi's system memory.

- Computer vision performance remains similar to the previous AI HAT+ (26 TOPS INT4), so the new board’s advantage lies mainly in LLM and generative tasks.

- Even so, the supported model sizes and available memory remain far below what cloud LLM infrastructure offers, which constrains model choice and complexity.

- Thermal and form-factor considerations (the chips run hot and the board requires cooling and specific mounting) affect practical deployment.

Key facts

- Accelerator: Hailo-10H neural network silicon.

- Inference performance: 40 TOPS (INT4) advertised.

- Onboard memory: 8 GB on the AI HAT+ 2 module.

- Computer vision throughput: roughly comparable to the previous AI HAT+, rated at 26 TOPS (INT4).

- Connection: plugs into Raspberry Pi GPIO and communicates over PCIe.

- Cooling: Raspberry Pi offers an optional passive heatsink, but the reviewer notes that active cooling will be necessary in practice.

- Software: native support in rpicam-apps; tested with Docker and a hailo-ollama server running Qwen2.

- Launch model support: DeepSeek-R1-Distill, Llama3.2, Qwen2.5-Coder, Qwen2.5-Instruct, and Qwen2 (the listed models are 1.5B parameters, except Llama3.2).

- Price/context: the article cites a $130 price for the AI HAT+ 2 and points to cheaper options (the previous AI HAT+ and the $70 AI camera) for vision workloads.

- Raspberry Pi offers Pi 5 configurations with up to 16 GB system RAM as an alternative approach to addressing memory needs.

What to watch next

- Whether Raspberry Pi and partners deliver the 'larger models' the company said will arrive in future updates.

- Adoption choices by developers: whether they prefer buying a Pi 5 with more system RAM (for example 16 GB) or adding the AI HAT+ 2 module. The source does not confirm which way this will go.

- Market response for vision-only deployments, given the predecessor AI HAT+ and cheaper AI camera options remain competitive for those use cases.

Quick glossary

- TOPS: Trillions of operations per second, a metric used to quantify raw inference throughput for AI accelerators.

- INT4: A low-precision integer data format (4-bit) used in neural network inference to increase performance and efficiency at the cost of numeric precision.

- LLM: Large language model, a neural network trained on large text corpora to perform language tasks like generation and comprehension.

- PCIe: Peripheral Component Interconnect Express, a high-speed interface standard used to connect expansion hardware to a host computer.

- Neural network accelerator: A dedicated chip designed to speed up machine learning inference by optimizing common neural network operations.
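The INT4 entry can be made concrete with a small Python sketch of symmetric, per-tensor 4-bit quantization. This is a generic scheme chosen for illustration, not Hailo's actual toolchain, and the function names are hypothetical. It shows the trade-off the glossary describes: each value shrinks to 4 bits, but values round to one of at most 16 levels, so some precision is lost.

```python
# A minimal sketch of symmetric, per-tensor INT4 quantization.
# Assumption: a generic illustration, not how Hailo's toolchain quantizes models.

INT4_MIN, INT4_MAX = -8, 7  # range of a signed 4-bit integer


def quantize_int4(values: list[float]) -> tuple[list[int], float]:
    """Map floats to signed 4-bit integers using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero tensor; any positive scale works then.
    scale = max_abs / INT4_MAX if max_abs > 0 else 1.0
    # Round to the nearest level and clamp into the INT4 range.
    q = [max(INT4_MIN, min(INT4_MAX, round(v / scale))) for v in values]
    return q, scale


def dequantize_int4(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the 4-bit codes."""
    return [x * scale for x in q]


if __name__ == "__main__":
    weights = [0.91, -0.42, 0.07, -1.30, 0.55]
    q, scale = quantize_int4(weights)
    approx = dequantize_int4(q, scale)
    for w, a in zip(weights, approx):
        # The rounding error per value is at most half of one scale step.
        print(f"{w:+.3f} -> {a:+.3f} (error {abs(w - a):.3f})")
```

Using a single scale for the whole tensor keeps the sketch short. Practical quantizers often use per-channel or per-group scales to reduce the error.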

Reader FAQ

Does the AI HAT+ 2 work with Raspberry Pi 5?

Yes. It connects through the Pi's GPIO connector and communicates via PCIe; the reviewer tested it on an 8 GB Pi 5.

What models are supported at launch?

Raspberry Pi lists DeepSeek-R1-Distill, Llama3.2, Qwen2.5-Coder, Qwen2.5-Instruct, and Qwen2; apart from Llama3.2, these are 1.5B-parameter models.

Is the onboard 8 GB RAM enough for serious LLM work?

The article calls the module's 8 GB helpful but 'a little weedy' for memory-hungry AI workloads, and notes that buying a Pi 5 with 16 GB of system RAM is an alternative.
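To put the 8 GB figure in perspective, here is a rough, weights-only calculation in Python. It assumes weights dominate memory use and ignores the KV cache, activations, and runtime overhead, so real requirements will be higher. The bytes-per-parameter table, the treatment of 8 GB as 8 GiB, and the helper names are illustrative assumptions, not figures from the source.

```python
# Back-of-envelope estimate of LLM weight memory.
# Assumptions (not from the source): only weights are counted; KV cache,
# activations and runtime overhead are ignored, so real usage is higher.

BYTES_PER_PARAM = {"int4": 0.5, "int8": 1.0, "fp16": 2.0}
HAT_MEMORY_BYTES = 8 * 1024**3  # 8 GB onboard, treated here as 8 GiB


def weight_bytes(params: float, dtype: str) -> float:
    """Bytes needed just to hold the model weights at a given precision."""
    return params * BYTES_PER_PARAM[dtype]


def max_params(memory_bytes: int, dtype: str) -> float:
    """Upper bound on parameter count whose weights alone fit in memory."""
    return memory_bytes / BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    params = 1.5e9  # size of most launch models, per the source
    for dtype in ("int4", "int8", "fp16"):
        gib = weight_bytes(params, dtype) / 1024**3
        ceiling = max_params(HAT_MEMORY_BYTES, dtype) / 1e9
        print(f"{dtype}: 1.5B weights ~ {gib:.2f} GiB; "
              f"weights-only ceiling in 8 GiB ~ {ceiling:.1f}B params")
```

At 4 bits per weight, a 1.5B-parameter model needs well under 1 GiB for its weights. This suggests that the launch lineup's small size reflects software and model support more than a hard weights-only memory limit.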

Is this a clear upgrade for computer vision tasks?

No. Computer vision performance is similar to the earlier AI HAT+ (26 TOPS INT4), and the piece suggests the older HAT+ or the $70 AI camera may be better value for vision-only use.

Sources

- Raspberry Pi 5 gets LLM smarts with AI HAT+ 2

- Gen AI on Your Raspberry Pi: A Hands-On Review of the …

- AI software – Raspberry Pi Documentation

- AI HATs – Raspberry Pi Documentation