TL;DR

Signal president Meredith Whittaker and VP Udbhav Tiwari told 39C3 attendees that current agentic AI designs create large, plain‑text local databases and introduce surveillance and malware risks. They also argued agentic workflows are probabilistic and degrade across multiple steps, and urged industry actions including default opt‑out and greater transparency.

What happened

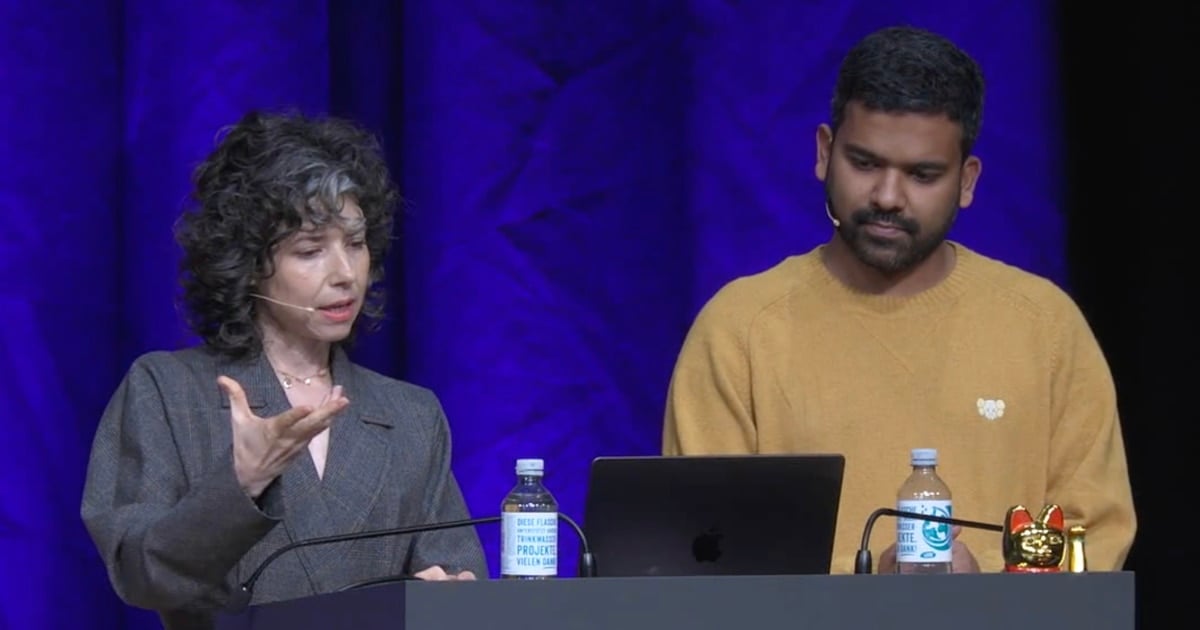

At the 39th Chaos Communication Congress (39C3) in Hamburg, Signal President Meredith Whittaker and VP Udbhav Tiwari presented a talk titled “AI Agent, AI Spy,” laying out security, privacy and reliability concerns about agentic AI. They described how agentic assistants must gather broad, sensitive context to act autonomously, and warned that some implementations store detailed, searchable records of user activity on the device. As an example, they cited Microsoft’s Recall for Windows 11, which captures periodic screenshots, runs OCR and semantic analysis, and compiles a timeline and topical metadata into a local database.

Tiwari argued that malware or hidden prompt‑injection attacks can expose those local repositories, undermining end‑to‑end encryption protections. Signal has added a flag in its app to block its window from being recorded, but the speakers said that is not an adequate long‑term fix. Whittaker also highlighted that agentic systems are probabilistic: per‑step errors compound, so multi‑step tasks succeed far less often than any single step. They urged the industry to halt unsafe deployments and to implement opt‑out defaults and auditable transparency.

Why it matters

- Local databases created for agentic assistants can hold extensive, sensitive records of user activity, raising new attack surfaces for malware and exploitation.

- Signal’s leaders say these designs can defeat end‑to‑end encryption guarantees by exposing plaintext data to other processes or attackers on the same device.

- Probabilistic behavior of AI agents means multi‑step tasks can fail at high rates, potentially causing harmful or erroneous autonomous actions.

- Widespread deployment without stronger safeguards risks eroding consumer trust in agentic AI and could prompt regulatory or market pushback.
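
To make the first two points concrete, here is a minimal sketch of why an unencrypted local activity log weakens end‑to‑end encryption: any code that can read the file can read the text that was on screen. The table layout, column names, sample row and function names are all hypothetical, chosen only to mirror the kinds of fields described in the talk; this is not Recall's actual schema or storage format.

```python
import os
import sqlite3
import tempfile
from contextlib import closing

# Hypothetical schema: NOT the real Recall layout, only the kinds of
# fields the talk described (timeline, OCR text, dwell time, app, topic).
SCHEMA = """
CREATE TABLE activity (
    captured_at   TEXT,
    app_name      TEXT,
    ocr_text      TEXT,
    dwell_seconds REAL,
    topic         TEXT
)
"""


def write_activity_log(path: str) -> None:
    """Stand-in for an assistant that stores OCR'd screen text unencrypted."""
    with closing(sqlite3.connect(path)) as conn:
        with conn:  # commits on success
            conn.execute(SCHEMA)
            conn.execute(
                "INSERT INTO activity VALUES (?, ?, ?, ?, ?)",
                ("2025-01-01T12:00:00", "Messenger",
                 "meet at 6pm, bring the documents", 42.0, "plans"),
            )


def read_as_other_program(path: str) -> list[str]:
    """Stand-in for any other program with read access to the same file."""
    with closing(sqlite3.connect(path)) as conn:
        return [row[0] for row in conn.execute("SELECT ocr_text FROM activity")]


db_path = os.path.join(tempfile.mkdtemp(), "activity.db")
write_activity_log(db_path)
leaked = read_as_other_program(db_path)
print(leaked)
```

The message in this sketch could have been encrypted in transit, yet the second function recovers it without touching any encryption keys, because the text was captured after decryption and stored in the clear.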

Key facts

- Presentation delivered by Meredith Whittaker (Signal President) and Udbhav Tiwari (Signal VP) at 39C3 in Hamburg.

- Agentic AI requires access to contextual and sensitive data to perform tasks autonomously, per the talk.

- Microsoft’s Recall for Windows 11 was cited as an example: it periodically screenshots the screen, OCRs text, and builds a searchable local database.

- The Recall database reportedly includes timelines, raw OCR text, dwell time, app focus and topical assignments for activities.

- Tiwari warned that local databases can be accessed by malware or through hidden prompt‑injection attacks, potentially bypassing E2EE protections.

- Signal added an app flag intended to prevent its screen from being recorded; Signal’s leaders said this is not a robust long‑term solution.

- Whittaker emphasized that agentic AI is probabilistic; she gave examples showing how errors compound across steps (e.g., 95% per‑step accuracy yields ~59.9% success over 10 steps and ~21.4% over 30 steps).

- At 90% per‑step accuracy, a 30‑step task would succeed only about 4.2% of the time, and Signal’s leaders said even top agent models still fail often.

- Recommended mitigations included halting reckless deployments, making opt‑out the default with mandatory developer opt‑ins, and demanding granular, auditable transparency.
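
The compounding figures above can be reproduced with a short calculation. This is a minimal sketch that assumes every step succeeds independently with the same probability, so the chance of completing the whole task is the per‑step accuracy raised to the number of steps; the function name `chain_success_rate` is illustrative and does not come from the talk.

```python
def chain_success_rate(per_step_accuracy: float, steps: int) -> float:
    """Probability that all steps succeed, assuming independent steps
    that each succeed with the same probability."""
    if not 0.0 <= per_step_accuracy <= 1.0:
        raise ValueError("per_step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_accuracy ** steps


# The three scenarios quoted in the key facts.
scenarios = [(0.95, 10), (0.95, 30), (0.90, 30)]
for accuracy, steps in scenarios:
    rate = chain_success_rate(accuracy, steps)
    print(f"{accuracy:.0%} per step over {steps} steps: {rate:.1%} overall")
```

Under this model, 0.95 to the 30th power is about 0.2146, so the ~21.4% quoted above appears to be truncated rather than rounded. Real agents may do better or worse than the independence assumption suggests, since failures at one step can be correlated with failures at later steps.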

What to watch next

- Whether major OS and software vendors revise designs like Recall to limit local plain‑text aggregation of user activity (not confirmed in the source).

- Industry moves toward default opt‑out settings and mandatory developer opt‑ins for agentic features (not confirmed in the source).

- Regulatory responses or standards addressing storage of local user activity and access controls for agentic systems (not confirmed in the source).

- Security fixes or architectural changes that prevent local agent databases from being readable by other processes or malware (not confirmed in the source).

Quick glossary

- Agentic AI: AI systems designed to act autonomously on behalf of a user, performing multi‑step tasks and making decisions with varying degrees of independence.

- End‑to‑end encryption (E2EE): A method of secure communication where only the communicating users can read the messages, intended to prevent intermediaries from accessing plaintext content.

- OCR (Optical Character Recognition): Technology that converts images of text into machine‑readable text, often used to extract content from screenshots or scanned documents.

- Prompt injection: An attack that manipulates the inputs or context given to an AI model to change its behavior or exfiltrate data.

Reader FAQ

Did Signal say agentic AI is inherently unsafe?

Signal leaders warned current implementations are insecure and unreliable, and said there is no comprehensive solution today—only triage and mitigations.

What is Microsoft Recall and why was it mentioned?

The presentation cited Recall as a feature that takes frequent screenshots, extracts text via OCR, analyzes context and stores a detailed local database of user activity.

Has Signal fully blocked being recorded by agent features?

Signal added a flag to its app to prevent its screen from being recorded, but the speakers said that measure is not a reliable long‑term fix.

Are there proven technical fixes to make agentic AI private and secure?

According to the talk, no comprehensive solution currently exists; the speakers recommended stopping risky deployments, default opt‑out, and radical transparency.

Sources

- Signal leaders warn agentic AI is an insecure, unreliable surveillance risk

- AI Agent, AI Spy

- Hacker News