TL;DR

Vendor claims of 55–90% developer productivity boosts clash with independent research showing little or negative effects for most teams. Benefits are concentrated in AI-native startups, greenfield projects and routine tasks; legacy systems, integration costs and a learning curve blunt gains for the majority.

What happened

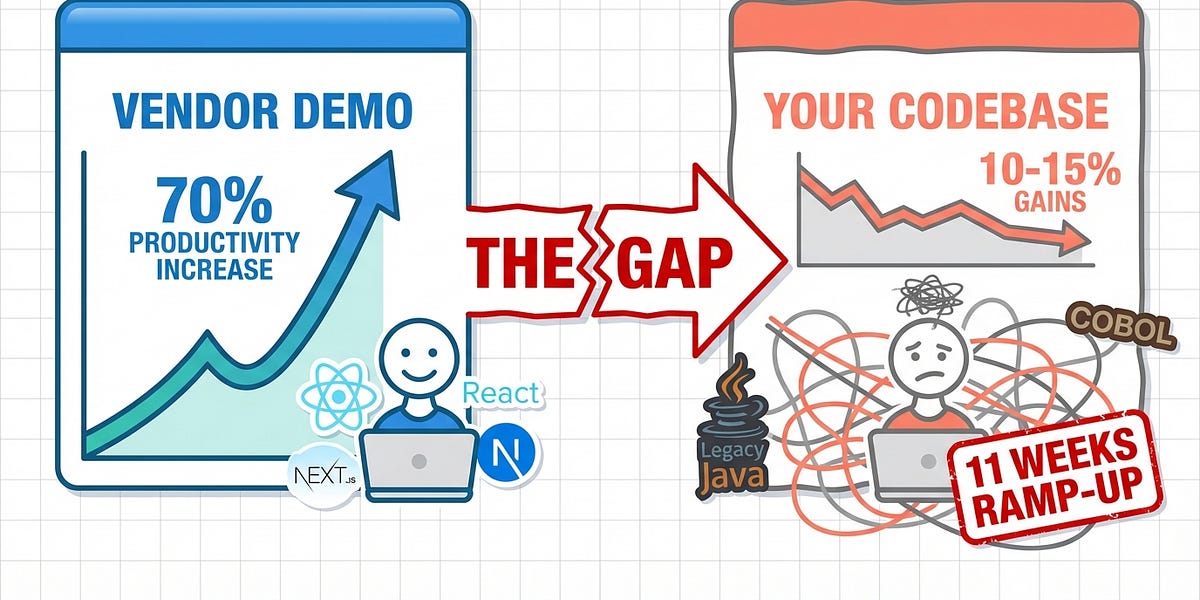

Vendors and some corporate reports have promoted large developer productivity lifts from AI tools, citing figures such as 55% (GitHub), 20–30% (Microsoft) and time savings of 40–60 minutes per day (OpenAI). Independent research paints a different picture: in a randomized controlled trial by METR, experienced developers took 19% longer to finish tasks when AI tools were allowed than when they were not. Surveys also show mixed sentiment: roughly half of developers report a positive impact, while many distrust AI output and cite “almost right” results that require extra debugging.

The sharpest gains appear in limited contexts: AI-native firms, greenfield projects, boilerplate-heavy work and early-career engineers. For most enterprises, entrenched legacy systems, integration friction, a learning period for AI fluency and developer trust issues create a sizable gap between vendor demos and production outcomes. Analysts recommend realistic pilots, outcome-based metrics and budgeted ramp-up time before expecting large returns.
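
Percentage claims such as "55% faster" and "19% longer" are not symmetric, so setting them side by side can mislead. A minimal sketch, assuming throughput is simply the inverse of time per task, converts a change in completion time into a change in tasks completed per unit time. The helper `throughput_multiplier` is hypothetical, and the two readings of "55% faster" are assumptions: the source does not say whether the figure means time cut by 55% or work rate multiplied by 1.55.

```python
def throughput_multiplier(time_ratio: float) -> float:
    """Relative tasks completed per unit time, given new_time / baseline_time.

    Hypothetical helper: assumes throughput is the inverse of time per task.
    """
    if time_ratio <= 0:
        raise ValueError("time_ratio must be positive")
    return 1.0 / time_ratio


# METR figure: tasks took 19% longer, so the time ratio is 1.19.
metr = throughput_multiplier(1.19)  # about 0.84, i.e. roughly 16% fewer tasks per unit time

# Two possible readings of "55% faster" (assumption: the source does not specify).
time_cut_55 = throughput_multiplier(1 - 0.55)  # time reduced by 55%: about 2.22x throughput
speed_up_55 = throughput_multiplier(1 / 1.55)  # work rate 1.55x: time ratio about 0.645

for label, value in [("METR", metr), ("55% less time", time_cut_55), ("1.55x speed", speed_up_55)]:
    print(f"{label}: {value:.2f}x baseline throughput")
```

The gap between the two readings (about 2.22x versus 1.55x) is a reminder to check how a vendor figure is defined before comparing it with independent results.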

Why it matters

- Decision-makers risk overestimating productivity and making hiring or budget changes based on vendor demos rather than results measured on their own codebases.

- Enterprises with legacy codebases and complex domains are unlikely to capture the high-end gains seen in AI-native environments without costly modernization.

- Perception gaps, where developers believe they sped up despite measured slowdowns, can mask regressions in cycle time and quality.

- ROI timelines extend beyond individual adoption: organization-level payback can take many months (analyst guidance cited in the piece suggests roughly 11–13 months) and requires deliberate integration and training.

Key facts

- METR (Model Evaluation & Threat Research) randomized controlled study: experienced developers took 19% longer to complete tasks when AI tools were allowed than when they were not.

- Vendor and corporate claims cited in the piece include GitHub Copilot (55% faster), Microsoft (20–30% improvements) and OpenAI (40–60 minutes saved per day).

- Stack Overflow 2025 survey: 52% of developers report some positive productivity impact from AI tools; 46% now distrust AI output accuracy (up from 31% the prior year).

- Top frustration: 66% of developers cited AI suggestions that are “almost right, but not quite,” creating extra debugging work.

- BairesDev reported developers spend nearly 4 hours per week on AI-related upskilling; Microsoft research cited an 11-week period for developers to fully realize productivity gains from AI coding tools.

- Analyst estimates cited: Bain found roughly 10–15% productivity improvement with AI assistants in practice; McKinsey reported broader operational cost savings of 5–20%.

- Industry research referenced: organizations may spend up to 80% of IT budgets on maintaining outdated systems and over 70% of digital transformation efforts stall due to legacy bottlenecks.

- Reported high-gain contexts: AI-native startups, greenfield projects, boilerplate-heavy tasks, and early-career developers; one high-growth SaaS CTO reported moving toward 90% AI-generated code in their stack (as reported to Menlo Ventures).

- Adoption of advanced AI agents is uneven: about 48% of developers use agents or advanced tooling, while the remainder rely on simpler tools or have no plans to adopt agents.
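
The ramp-up and upskilling figures above can be combined into a rough, individual-level break-even estimate. This is a toy model, not the method behind any cited figure. It assumes a 40-hour week, a gain that ramps linearly from zero to a steady 12% (a hypothetical value inside Bain's 10–15% range) over Microsoft's ~11-week period, and BairesDev's ~4 hours per week of upskilling. The function `break_even_week` and its parameters are hypothetical. It counts hours only and ignores licenses, integration work and organizational overhead, so it should not be compared directly with the organizational ROI estimates.

```python
from typing import Optional


def break_even_week(
    hours_per_week: float = 40.0,   # assumption: a standard 40-hour week
    steady_gain: float = 0.12,      # hypothetical value inside Bain's 10-15% range
    ramp_weeks: int = 11,           # Microsoft's ~11-week ramp-up figure
    upskill_hours: float = 4.0,     # BairesDev's ~4 hours/week of upskilling
    upskill_forever: bool = False,  # assumption: upskilling stops once ramp-up ends
    horizon_weeks: int = 260,       # give up after about five years
) -> Optional[int]:
    """Return the first week in which cumulative hours saved exceed hours spent
    upskilling, or None if that never happens within horizon_weeks.

    Toy model: the gain ramps linearly from zero to steady_gain over ramp_weeks.
    """
    cumulative = 0.0
    for week in range(1, horizon_weeks + 1):
        gain = steady_gain * min(week / ramp_weeks, 1.0)
        saved = hours_per_week * gain
        spent = upskill_hours if (upskill_forever or week <= ramp_weeks) else 0.0
        cumulative += saved - spent
        if cumulative > 0:
            return week
    return None


print(break_even_week())  # 15 under the default assumptions
print(break_even_week(steady_gain=0.10, upskill_forever=True))  # None: after ramp-up, 4 h saved only offsets 4 h spent
```

Small changes to the assumed steady-state gain or to how long upskilling continues move the break-even point a lot, which is one reason to measure on a real pilot rather than extrapolate.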

What to watch next

- Real-world pilot results on your actual codebases rather than vendor demos — track time-to-value and defect rates.

- Ramp-up and training metrics: Microsoft research indicates an ~11-week individual learning period and analyst guidance suggests ~11–13 months for organizational ROI.

- Adoption and trust signals among engineers (e.g., percentage accepting AI suggestions, reported distrust rates and defect trends).
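
One way to act on these watch items is to log changes made during a pilot and compare AI-assisted work against a baseline on the same codebase. A minimal sketch follows; the `Change` record, its fields and the helper functions are hypothetical, so adapt them to whatever your delivery and incident tooling actually records. Groups that developers choose for themselves can be confounded; randomly assigning tasks to AI-allowed and AI-disallowed conditions, as in the METR trial, gives a cleaner comparison.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Change:
    """One merged change from a pilot (hypothetical record shape)."""
    ai_assisted: bool
    cycle_time_hours: float      # e.g. first commit to production deploy
    caused_defect: bool          # later linked to a production defect
    suggestions_shown: int = 0   # AI suggestions offered while making the change
    suggestions_accepted: int = 0


def summarize(changes: list[Change]) -> dict[str, float]:
    """Median cycle time, defect rate and AI-suggestion acceptance rate for one group."""
    if not changes:
        raise ValueError("need at least one change per group")
    shown = sum(c.suggestions_shown for c in changes)
    accepted = sum(c.suggestions_accepted for c in changes)
    return {
        "median_cycle_time_hours": median(c.cycle_time_hours for c in changes),
        "defect_rate": sum(c.caused_defect for c in changes) / len(changes),
        "acceptance_rate": accepted / shown if shown else 0.0,
    }


def compare(changes: list[Change]) -> dict[str, dict[str, float]]:
    """Summarize AI-assisted and baseline changes side by side."""
    return {
        "ai": summarize([c for c in changes if c.ai_assisted]),
        "baseline": summarize([c for c in changes if not c.ai_assisted]),
    }


# Illustrative data only, not results from any study.
pilot = [
    Change(ai_assisted=True, cycle_time_hours=20.0, caused_defect=False,
           suggestions_shown=10, suggestions_accepted=6),
    Change(ai_assisted=True, cycle_time_hours=30.0, caused_defect=True,
           suggestions_shown=8, suggestions_accepted=2),
    Change(ai_assisted=False, cycle_time_hours=24.0, caused_defect=False),
    Change(ai_assisted=False, cycle_time_hours=28.0, caused_defect=False),
]
print(compare(pilot))
```

The acceptance rate is best read as a trust signal; cycle time and defect rate are the outcome metrics the source recommends tracking.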

Quick glossary

- AI assistant / coding tool: Software that uses machine learning models to suggest, generate or review code and documentation for developers.

- Hallucination: When a generative model produces plausible-looking but incorrect or fabricated output.

- Greenfield project: A new development effort started from scratch, without constraints from legacy systems or prior architecture.

- Legacy system: Older software or infrastructure still in use that can be costly or difficult to modify and that may not interoperate well with modern tools.

- Ramp-up period: The initial learning and adaptation phase during which users become proficient with new tools and before full productivity benefits are realized.

Reader FAQ

Do AI tools actually deliver 70–90% developer productivity gains?

Not generally. The article argues those levels appear real for a small fraction (about 10%) of teams in specific contexts; most organizations see much smaller gains.

How long before teams typically see benefits from AI coding tools?

Microsoft research cited in the piece suggests developers may need about 11 weeks to fully realize personal productivity gains; organizational ROI is estimated at roughly 11–13 months.

Are experienced developers helped by AI tools?

According to the METR randomized study referenced, experienced developers in that trial were 19% slower when using AI tools on familiar codebases.

Will AI replace developers soon?

The source does not address this question.

How should leaders measure AI impact?

The source recommends outcome-focused metrics such as cycle time, defect rates and developer satisfaction rather than adoption rates or lines of generated code.

Sources

- The 70% AI productivity myth: why most companies aren't seeing the gains

- MIT: Why 95% of Enterprise AI Investments Fail to Deliver

- Generative AI's Productivity Myth

- The GenAI Divide: State of AI in Business 2025
