TL;DR

MIT CSAIL researchers developed Neural Jacobian Fields (NJF), a vision-based system that lets robots learn their own geometry and how their bodies respond to motor commands from visual observation, rather than from built-in sensors or hand-designed models. After training with multiple cameras on random motions, the system can perform real-time closed-loop control of different robot types using a single monocular camera, although it currently needs per-robot training and lacks force sensing.

What happened

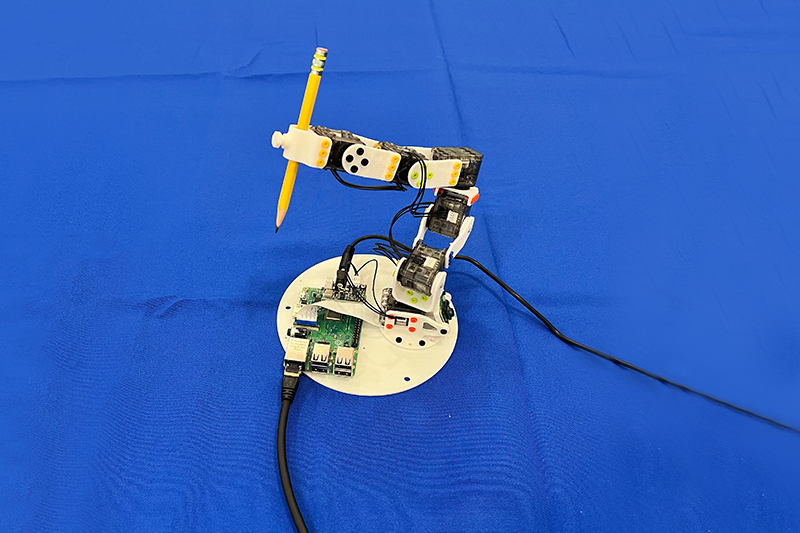

Researchers at MIT CSAIL introduced Neural Jacobian Fields (NJF), a learning-based approach that infers a robot’s 3D shape, and how points on its body respond to motor commands, using only visual data. During training, the robot executes random motions while multiple cameras capture images; a neural model that extends ideas from neural radiance fields jointly reconstructs the geometry and a Jacobian field that predicts how each point moves in response to control inputs. After training, the system needs only a single monocular camera to run closed-loop control at roughly 12 Hz.

The team demonstrated the method on diverse platforms (a pneumatic soft hand, a rigid Allegro hand, a 3D-printed arm and a rotating platform) and showed that the model can discover which actuators affect which parts of the body without human labeling. The work was published in Nature and highlights prospects for controlling soft and unconventional robots without embedded sensors.
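The relationship a Jacobian field captures can be written as a first-order (linearized) model. In the notation below, which is ours and not necessarily the paper's, x_i is a 3D point on the robot's body, u is the vector of K motor commands, and J(x_i) is the predicted Jacobian at that point. The second line shows one common way such a model could drive control: choose the command change that best produces desired point motions, using damped least squares with an assumed regularization weight λ. This is an illustrative formulation, not a description of the paper's exact planner.

```latex
\begin{align*}
\delta\mathbf{x}_i &\approx \mathbf{J}(\mathbf{x}_i)\,\delta\mathbf{u},
  \qquad \mathbf{J}(\mathbf{x}_i) = \frac{\partial \mathbf{x}_i}{\partial \mathbf{u}} \in \mathbb{R}^{3 \times K} \\
\delta\mathbf{u}^{\star} &= \arg\min_{\delta\mathbf{u}} \sum_i \bigl\lVert \delta\mathbf{x}_i^{\mathrm{goal}} - \mathbf{J}(\mathbf{x}_i)\,\delta\mathbf{u} \bigr\rVert^2 + \lambda \lVert \delta\mathbf{u} \rVert^2 \\
  &= \Bigl( \sum_i \mathbf{J}(\mathbf{x}_i)^{\top} \mathbf{J}(\mathbf{x}_i) + \lambda \mathbf{I} \Bigr)^{-1} \sum_i \mathbf{J}(\mathbf{x}_i)^{\top} \delta\mathbf{x}_i^{\mathrm{goal}}
\end{align*}
```

The closed form follows from setting the gradient of the objective to zero. A positive λ keeps the matrix invertible even when some actuators barely move the observed points; the dependence of J on the robot's current state is left implicit here.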

Why it matters

- Reduces dependency on embedded sensors and hand-crafted physical models, lowering hardware and engineering costs.

- Enables control of soft, deformable, or irregular robots that are difficult to model analytically.

- Uses accessible visual inputs, opening possibilities for field robots that operate without external tracking or GPS.

- Runs in near real time (≈12 Hz), making it more practical than many computation-heavy simulators for soft bodies.

Key facts

- The system is called Neural Jacobian Fields (NJF) and was developed at MIT CSAIL.

- NJF learns both 3D geometry and a Jacobian field that predicts local motion from motor commands.

- Training uses random robot motions captured by multiple cameras; no prior model of the robot or human supervision is required.

- After training, a single monocular camera suffices for closed-loop control at about 12 Hz.

- Tested platforms include a pneumatic soft hand, a rigid Allegro hand, a 3D-printed robotic arm, and a rotating platform.

- The paper was published open-access in Nature on June 25 (paper title: 'Controlling diverse robots by inferring Jacobian fields with deep networks').

- Current limitations: the method must be retrained per robot and lacks force or tactile sensing, constraining contact-rich tasks.

- Researchers are exploring improvements in generalization and occlusion handling, and ways to extend the model’s reasoning over longer spatial and temporal horizons.
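The training idea above, learning how the body moves in response to commands by watching random motions, can be illustrated at its smallest scale: estimating the Jacobian of one tracked point by linear least squares from random command perturbations and the displacements they cause. This is a simplified stand-in, not NJF's method. NJF trains a neural field over the whole body from multi-view images, whereas this sketch assumes the point's displacements are already measured and that its response is linear over small perturbations. The helper names (`solve_linear`, `estimate_jacobian`) and the "true" Jacobian used to generate synthetic data are hypothetical.

```python
import random


def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Augmented matrix [a | b] so row operations update both sides together.
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("system is singular or nearly singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def estimate_jacobian(du_samples, dx_samples):
    """Fit J (one row per displacement axis, one column per actuator) so that dx ≈ J @ du."""
    k = len(du_samples[0])
    # Normal matrix (A^T A) built from the command perturbations; shared by every row of J.
    ata = [[sum(du[i] * du[j] for du in du_samples) for j in range(k)] for i in range(k)]
    jac = []
    for axis in range(len(dx_samples[0])):
        # Right-hand side A^T b for this displacement axis.
        atb = [sum(du[i] * dx[axis] for du, dx in zip(du_samples, dx_samples)) for i in range(k)]
        jac.append(solve_linear(ata, atb))
    return jac


# Synthetic "random motion" data for one hypothetical tracked point (3D motion, 2 actuators).
random.seed(0)
true_j = [[0.5, -0.2], [0.1, 0.3], [0.0, 0.8]]
du_samples = [[random.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(50)]
dx_samples = [
    [sum(true_j[r][c] * du[c] for c in range(2)) + random.gauss(0.0, 0.01) for r in range(3)]
    for du in du_samples
]
j_hat = estimate_jacobian(du_samples, dx_samples)
print([[round(v, 2) for v in row] for row in j_hat])
```

Column c of the estimate describes how actuator c moves the point, so an entry near zero (here, actuator 0 along the third axis) suggests that the actuator barely affects that part of the body: a toy version of the actuator-to-body-part discovery described in the article.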

What to watch next

- Work on improving cross-robot generalization so models trained on one platform transfer to others.

- Efforts to integrate force or tactile sensing with vision so the approach can handle contact-rich tasks.

- Techniques for operating robustly under occlusion or with sparser visual data, and for reducing the multi-camera training requirement.

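- Translation of the researchers’ envisioned phone-based workflow, in which users record a robot’s random movements with a smartphone camera, into user-friendly pipelines for hobbyists and field deployment.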

Quick glossary

- Neural Jacobian Field (NJF): A neural model that jointly represents a robot’s 3D shape and a field predicting how local points move in response to control inputs.

- Jacobian: The matrix of partial derivatives of a system’s outputs with respect to its inputs; here, it maps small changes in control inputs (like motor commands) to the resulting small changes in a system’s configuration or position.

- Neural Radiance Field (NeRF): A learned scene representation that maps a 3D position and viewing direction to color and density, enabling novel-view rendering and 3D reconstruction from images.

- Closed-loop control: A control strategy that continuously observes a system’s state and adjusts actions in real time to achieve a goal.

- Monocular camera: A single-lens camera that captures 2D images; when combined with learned models, it can be used for 3D perception and control.
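The glossary's definitions of a Jacobian and of closed-loop control combine into a simple observe-plan-act loop. The sketch below rests on stated assumptions: a simulated planar two-link arm stands in for the robot and camera (`observe` is hypothetical), finite differences on that simulator stand in for NJF's learned Jacobian field, and each command update uses damped least squares, a standard Jacobian-based rule chosen here for illustration rather than the paper's own planner. All function names are hypothetical.

```python
import math


def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("system is singular or nearly singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def observe(q):
    """Hypothetical camera + robot: 2D positions of the elbow and tip of a planar two-link arm."""
    l1, l2 = 1.0, 0.8
    elbow = [l1 * math.cos(q[0]), l1 * math.sin(q[0])]
    tip = [elbow[0] + l2 * math.cos(q[0] + q[1]), elbow[1] + l2 * math.sin(q[0] + q[1])]
    return [elbow, tip]


def jacobian_field(q, eps=1e-6):
    """Stand-in for a learned Jacobian field: finite-difference d(point)/d(command) per point."""
    base = observe(q)
    jacs = [[[0.0] * len(q) for _ in range(2)] for _ in base]
    for c in range(len(q)):
        nudged = list(q)
        nudged[c] += eps
        moved = observe(nudged)
        for p in range(len(base)):
            for r in range(2):
                jacs[p][r][c] = (moved[p][r] - base[p][r]) / eps
    return jacs


def control_step(jacobians, targets, damping=1e-3):
    """Damped least squares: du = (sum_i J_i^T J_i + damping*I)^-1 sum_i J_i^T t_i."""
    k = len(jacobians[0][0])
    lhs = [[damping if i == j else 0.0 for j in range(k)] for i in range(k)]
    rhs = [0.0] * k
    for jac, t in zip(jacobians, targets):
        rows = range(len(jac))
        for i in range(k):
            rhs[i] += sum(jac[r][i] * t[r] for r in rows)
            for j in range(k):
                lhs[i][j] += sum(jac[r][i] * jac[r][j] for r in rows)
    return solve_linear(lhs, rhs)


def run_closed_loop(q, goal, steps=50, gain=0.5, tol=1e-4):
    """Observe, compare with the goal, apply a small correction, and repeat."""
    for _ in range(steps):
        points = observe(q)  # 1. observe the current state
        errors = [[g[r] - p[r] for r in range(2)] for g, p in zip(goal, points)]
        if max(abs(e) for err in errors for e in err) < tol:
            break  # close enough to the goal
        du = control_step(jacobian_field(q), errors)  # 2. choose a command change
        q = [qi + gain * d for qi, d in zip(q, du)]  # 3. act, then loop back to observe
    return q


goal = observe([0.6, 0.9])  # goal: point positions at hypothetical commands (0.6, 0.9)
q_final = run_closed_loop([0.2, 0.3], goal)
print([round(v, 3) for v in q_final])
```

The gain below 1 and the small damping term are conservative choices that keep each correction modest; because the loop re-observes the points on every iteration, it absorbs the error introduced by linearizing around the current state.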

Reader FAQ

Does NJF require onboard sensors?

No — the demonstrated systems operated without embedded sensors; control is based on visual observation.

How many cameras are needed?

Training used multiple cameras, but after training the robot can be controlled with one monocular camera.

Can a single trained model control multiple different robots?

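Not as currently described. The source says the method must be trained separately for each robot, and cross-robot generalization is listed as an open direction.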

Can NJF handle tasks that require force or touch sensing?

The source states the method currently lacks force or tactile sensing, which limits contact-rich tasks.

Neural Jacobian Fields, developed by MIT CSAIL researchers, can learn to control any robot from a single camera, without any other sensors. Rachel Gordon | MIT CSAIL. Publication Date: …

Sources

- Robot, know thyself: New vision-based system teaches machines to understand their bodies

- MIT Teaches Soft Robots Body Awareness Through AI And …

- Controlling diverse robots by inferring Jacobian fields with …
